Prompt Engineering and Fine Tuning

On several occasions people have asked me about training a Large Language Model (LLM) on their data, assuming that it is required to get better results. Though that would most likely improve responses, I suggest to them that it is important to think clearly about the improvements they'd like to see and the level of effort and resources they're willing to commit. With some careful analysis it may turn out that prompt engineering is sufficient and would be faster, cheaper and easier to implement.

This article goes into more detail on using LLMs; for a higher-level overview, check out Intro To Generative AI.

Challenges with LLMs

The reason they are looking to fine tune a model is that they're facing challenges such as the ones below. However, several of these issues may be efficiently mitigated with prompt engineering.

Hallucinations - LLMs are trained to complete text, not necessarily to retrieve factual information. This can lead them to complete sentences in a non-factual way. This can be incredibly useful in creative (and generative) situations and frustrating when a more factual response is desired. Additionally, LLMs are often not very good at math and detailed reasoning. There are various prompt engineering techniques, some discussed below, that can help with this issue.

Up-to-date data - LLMs are trained with data collected up to a certain date. In the base case they don't have access to information after that date. However, new developments in "tools" and "functions", along with a prompt engineering technique called In Context Learning (ICL), can help by inserting up-to-date information into the prompt that the LLM can use to answer the question.

Non-public data - LLMs did not see your organization's private (or unpublished) data, such as your employee handbook, during training, so they have no way to answer questions based on it, such as "What is the family sick leave policy?". Again, using ICL, the relevant parts of the handbook can be inserted into the prompt and the LLM can formulate a natural language answer as opposed to simply listing a set of links.

Non-consensus knowledge - LLMs are trained on a large corpus of documents, and common knowledge is reinforced more strongly than less common information. This can lead LLMs to answer based on the more common viewpoint. Though you can get them to respond "in the style of" famous figures and frameworks, you often want them to answer with a less common or more specific point of view or voice. Various prompting techniques can help with this issue.

Running on the edge - Either to increase availability or to reduce costs and latency, many people want to run LLMs on smaller devices such as phones and lower-powered computers. Getting the best performance and quality in these situations will take a combination of fine tuning an appropriate, appropriately sized model and a variety of engineering tricks.

Privacy & security - If you use a third-party LLM service, you will have to submit your questions and information to them. This raises many privacy and security questions. Depending on your needs, the terms and conditions may satisfy your requirements. If not, you may be able to get a BAA (Business Associate Agreement) or other agreement with the company. If that is not viable, your other option may be to run your own, possibly fine tuned, LLM on premise or within your own private network with your own security safeguards.

TL;DR:

- Prompt Engineering can get you pretty far if you take it seriously.

- Fine tune if you must; there are products and services that will help, but mind your budget.

- Run on prem if necessary but support your ops department.

In my opinion most production applications will use a mix of prompt engineering, generative & discriminative ML, and traditional software engineering and information retrieval techniques. There are many companies and organizations working on improving LLM ops, and running a model on prem is possible, but you'll need to carefully think through your requirements.

Prompt Engineering

Prompt engineering is the art/science of crafting the text/prompt that is submitted to an LLM in order to get the best possible and most useful response. It can be more than randomly trying things till something 'works'.

In particular it is important to create a formal or informal process for generating prompts and evaluating results. This can range from a simple note with reminders to try various prompting techniques to a custom program that can generate various prompts and track and evaluate the results.

Unlike traditional software testing, evaluating results can be tricky for anything other than simple, factual responses. For example, if you are generating empathetic natural language responses, children's stories or jokes, there may not be an ideal way to automatically evaluate their quality.

Some approaches to keep in mind for evaluating your prompt engineering efforts include supervised (learning) evaluation, when you know what the correct answer should be. For example, "What is the capital of England?" should produce the answer "London". However, keep in mind that you may want to handle different variations of this answer, such as "London, England" or "The capital of England is ...", etc.
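A minimal sketch of this kind of supervised check, in plain Python with hypothetical `normalize` and `is_correct` helpers: normalize case, punctuation and whitespace, then accept the response if the expected answer appears in it as a whole phrase.

```python
import re
import string


def normalize(text: str) -> str:
    """Lowercase, drop punctuation and collapse runs of whitespace."""
    text = text.lower().translate(str.maketrans("", "", string.punctuation))
    return re.sub(r"\s+", " ", text).strip()


def is_correct(response: str, expected: str) -> bool:
    """True if the normalized expected answer appears as a whole phrase in the response."""
    pattern = r"\b" + re.escape(normalize(expected)) + r"\b"
    return re.search(pattern, normalize(response)) is not None


cases = [
    ("London", "London"),
    ("London, England", "London"),
    ("The capital of England is London.", "London"),
    ("Manchester", "London"),
]
for response, expected in cases:
    print(f"{response!r}: {is_correct(response, expected)}")
```

The first three cases pass and "Manchester" fails. The check is deliberately lenient (it would also accept "It is not London"), so for anything beyond short factual answers you will likely need a stricter check or one of the other evaluation approaches.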

Another technique is to use a second, more powerful LLM as an oracle to evaluate the answer. Surprisingly, this can work because it is often easier to evaluate something than to generate it. It can also be useful when you want to fine tune a smaller LLM, or distill knowledge from a larger model into one, for a particular use case. Note that this technique may be tricky if privacy is your main concern. But even then you may choose to run a second LLM for a short while on a larger machine while fine tuning the first to run with fewer resources.
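A sketch of the judging step, assuming you already have some way to call the second LLM (left out here). `build_judge_prompt`, `parse_score` and the 1-5 rubric are illustrative choices, not a standard. Asking for a fixed "Score: N" line makes the judge's reply easy to parse and lets you flag replies that ignore the format.

```python
import re
from typing import Optional

JUDGE_TEMPLATE = """You are grading an answer written by another assistant.

Question: {question}
Answer: {answer}

Rate the answer's accuracy and helpfulness from 1 (poor) to 5 (excellent).
Give a one-sentence justification, then a final line of the form "Score: N"."""


def build_judge_prompt(question: str, answer: str) -> str:
    return JUDGE_TEMPLATE.format(question=question, answer=answer)


def parse_score(judge_reply: str) -> Optional[int]:
    """Extract the 1-5 score; None means the judge didn't follow the format."""
    match = re.search(r"Score:\s*([1-5])\b", judge_reply)
    return int(match.group(1)) if match else None


prompt = build_judge_prompt("What is the capital of England?", "London, England")
# reply = call_judge_llm(prompt)  # hypothetical call to the stronger model
reply = "Correct and concise.\nScore: 5"
print(parse_score(reply))
```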

You may be forced to review and rate the answers manually, either by yourself or with co-workers. This is the most expensive and subjective approach. If it is necessary, it may be helpful to look into active (Bayesian) learning techniques, which help you find the tests that will yield the most information. For example, if you find that your model is not doing well with a certain kind of response, focusing your review on those kinds of questions would be more beneficial than spending resources reviewing all other responses.
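One simple way to act on this, sketched under assumptions: keep pass/fail tallies from manual review per question category, model each category's failure rate with a Beta posterior, and review next wherever an upper confidence bound on the failure rate is highest. This is a UCB-style heuristic, only one of many active learning strategies, and the categories and counts below are made up.

```python
import math


def failure_ucb(passes: int, failures: int, k: float = 1.0) -> float:
    """Posterior mean + k standard deviations of the failure rate, with a Beta(1, 1) prior."""
    a, b = failures + 1, passes + 1  # Beta posterior parameters for the failure rate
    mean = a / (a + b)
    var = a * b / ((a + b) ** 2 * (a + b + 1))
    return mean + k * math.sqrt(var)


# Hypothetical manual-review tallies per question category: (passes, failures)
reviews = {
    "policy lookups": (18, 2),
    "date arithmetic": (3, 4),
    "small talk": (1, 0),
}

ranked = sorted(reviews, key=lambda c: failure_ucb(*reviews[c]), reverse=True)
print(ranked)  # ['date arithmetic', 'small talk', 'policy lookups']
```

Categories that fail often ("date arithmetic") or have barely been reviewed ("small talk") rise to the top, while a well-reviewed, mostly passing category sinks. Raising `k` shifts the balance toward exploring uncertain categories.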

With that in mind here are some techniques to help with prompt engineering. Note that the prompts below are short examples of what is possible. Your prompts will be longer, more detailed and specific to your use case.

Better (Zero-Shot) prompting

Give the LLM a role to use. For example, do you want the answer to come from a person with a particular point of view or level of experience?

Define a clear task. The more precise and explicit you can be, the better. You'll definitely become more aware of all the hidden assumptions in our everyday language.
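A minimal sketch of combining a role and a task, assuming an OpenAI-style list of chat messages: the role goes in the system message, and the task plus its input go in the user message. `build_messages` is a hypothetical helper.

```python
from typing import Dict, List


def build_messages(role: str, task: str, content: str) -> List[Dict[str, str]]:
    """Zero-shot chat prompt: role as the system message, explicit task plus input as the user message."""
    return [
        {"role": "system", "content": role},
        {"role": "user", "content": f"{task}\n\n{content}"},
    ]


messages = build_messages(
    role="You are an experienced guidance counselor helping with college applications.",
    task="Summarize the following essay for a high school student in three bullet points.",
    content="...essay text...",
)
print(messages)
```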

You are an experienced guidance counselor helping with college applications ...

Summarize this for a high school student ...

Self Critique

Ask it to critique and rewrite its response in a follow up prompt. It is often easier to critique and rewrite something than to write it in the first place. Self-Refine: Iterative Refinement with Self-Feedback, Madaan et al.
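A sketch of the follow-up prompt as a loop, assuming an OpenAI-style message list and a hypothetical `ask` function that wraps whatever LLM API you use. It is simplified relative to Self-Refine: the model's critique and rewrite come back in one reply, whereas in practice you would ask for the improved answer under a marker and parse it out.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def critique_messages(question: str, draft: str) -> List[Message]:
    """Conversation asking the model to critique, then rewrite, its own previous answer."""
    return [
        {"role": "user", "content": question},
        {"role": "assistant", "content": draft},
        {"role": "user", "content": (
            "Critique the previous answer: list any errors, gaps or unclear parts. "
            "Then write an improved answer based on your critique."
        )},
    ]


def self_refine(question: str, ask: Callable[[List[Message]], str], rounds: int = 2) -> str:
    """Draft once, then critique-and-rewrite `rounds` times."""
    draft = ask([{"role": "user", "content": question}])
    for _ in range(rounds):
        draft = ask(critique_messages(question, draft))
    return draft
```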

What is ... [some hard problem] ...?

---

Analyze the previous answer and improve it based on your critique ...

One-Shot prompting

Provide an example of the type of question and response you'd like to see. It is preferable if the example is directly related to the question being asked which brings up the issue of how to choose the right example.
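One simple way to choose the example, sketched under assumptions: keep a small pool of (active, passive) pairs and pick the one whose words overlap most with the new sentence (Jaccard similarity). The helpers are hypothetical, and in production you would more likely use embedding similarity; word overlap just keeps the sketch dependency-free.

```python
import re
from typing import List, Set, Tuple


def words(text: str) -> Set[str]:
    return set(re.findall(r"[a-z']+", text.lower()))


def similarity(a: str, b: str) -> float:
    """Jaccard overlap of the two texts' word sets."""
    wa, wb = words(a), words(b)
    return len(wa & wb) / len(wa | wb) if wa | wb else 0.0


def one_shot_prompt(instruction: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Use the stored (active, passive) pair most similar to the query as the single example."""
    active, passive = max(examples, key=lambda ex: similarity(ex[0], query))
    return (f"{instruction}\n\nExample:\nActive: {active}\nPassive: {passive}\n\n"
            f"Active: {query}\nPassive:")


pool = [
    ("The cat chases the mouse.", "The mouse is chased by the cat."),
    ("The dog bites the man.", "The man is bitten by the dog."),
]
print(one_shot_prompt("Convert sentences from active voice to passive voice.", pool,
                      "The cat eats the fish."))
```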

Convert sentences from active voice to passive voice.

Example:

Active: The cat chases the mouse.

Passive: The mouse is chased by the cat.

Active: The chef cooks the meal.

Few-Shot prompting

Provide several examples. This is an extension of the previous techniques, which are discussed in Language Models are Few-Shot Learners, Brown et al.
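A small sketch of assembling a few-shot prompt programmatically, so the examples can come from a curated list or a database. `few_shot_prompt` and its label parameters are hypothetical. The prompt deliberately ends with an empty output label for the model to complete.

```python
from typing import List, Tuple


def few_shot_prompt(instruction: str, examples: List[Tuple[str, str]], query: str,
                    in_label: str = "Word", out_label: str = "Synonym") -> str:
    """Format several (input, output) examples, then leave the final output blank for the model."""
    parts = [instruction, ""]
    for inp, out in examples:
        parts += [f"{in_label}: {inp}", f"{out_label}: {out}", ""]
    parts += [f"{in_label}: {query}", f"{out_label}:"]
    return "\n".join(parts)


prompt = few_shot_prompt(
    "Generate a synonym for the given word.",
    [("happy", "joyful"), ("sad", "unhappy"), ("large", "big")],
    "small",
)
print(prompt)
```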

Generate a synonym for the given word.

Word: happy

Synonym: joyful

Word: sad

Synonym: unhappy

Word: large

Synonym: big

Word: small

In Context Learning

Provide contextual information in the prompt itself. This approach can help with hallucinations, up-to-date information, and non-public data. The challenge, again, is finding the most relevant document fragments to include in the prompt.
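A minimal sketch of the retrieve-then-prompt step. Keyword overlap stands in for a real retriever such as a vector database, and the handbook fragments and helper names are hypothetical.

```python
import re
from typing import List, Set


def tokens(text: str) -> Set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def top_fragments(question: str, fragments: List[str], k: int = 2) -> List[str]:
    """Rank fragments by how many words they share with the question (a stand-in for real retrieval)."""
    q = tokens(question)
    return sorted(fragments, key=lambda f: len(q & tokens(f)), reverse=True)[:k]


def icl_prompt(question: str, fragments: List[str], k: int = 2) -> str:
    resources = "\n\n".join(top_fragments(question, fragments, k))
    return ("Answer the question using the information below.\n"
            'If you don\'t know the answer respond with "I don\'t know."\n'
            "Don't make anything up.\n\n"
            f"Question: {question}\n\nResources:\n{resources}")


handbook = [  # hypothetical handbook fragments
    "Sick leave: employees accrue one sick day per month.",
    "Family sick leave: employees may use up to five sick days per year to care for a family member.",
    "Parking: spaces are assigned by seniority.",
]
print(icl_prompt("What is the family sick leave policy?", handbook))
```

The family sick leave fragment ranks first and the parking fragment is left out of the prompt.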

Answer the question using the information below.

If you don't know the answer respond with "I don't know."

Don't make anything up.

Question: What is the company policy on X?

Resources:

... some segment of the employee handbook ...

... some memo that updates the handbook ...

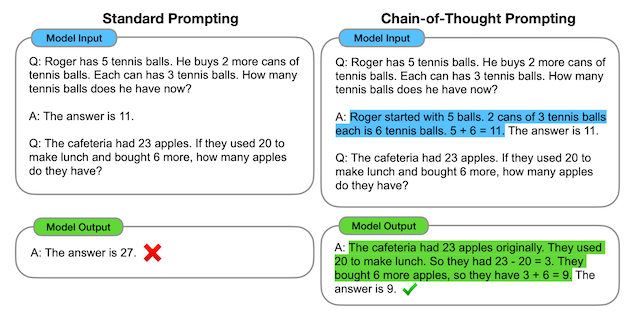

... etc. ...

Chain of Thought (COT)

Provide an example or examples of intermediate reasoning steps for similar problems. This is similar to few-shot prompting, but each example includes the reasoning that leads to its answer.

Chain-of-Thought Prompting Elicits Reasoning in Large Language Models, Wei et al.
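A small sketch of a chain-of-thought prompt: the worked example (made up for illustration, not taken from the paper) spells out each intermediate step before stating the answer, and the new question is left open after "A:". `cot_prompt` is a hypothetical helper.

```python
from typing import Sequence

COT_EXAMPLE = (
    "Q: A shop had 15 pens. It sold 7 and then received a box of 10. How many pens does it have now?\n"
    "A: After selling 7, it has 15 - 7 = 8 pens. After the box arrives, it has 8 + 10 = 18 pens. "
    "The answer is 18."
)


def cot_prompt(question: str, examples: Sequence[str] = (COT_EXAMPLE,)) -> str:
    """Few-shot prompt whose examples show the intermediate reasoning before each answer."""
    return "\n\n".join([*examples, f"Q: {question}\nA:"])


print(cot_prompt("A train leaves with 120 passengers, 45 get off and 30 get on. How many are aboard?"))
```

Ending each example with "The answer is N." also makes the final answer easy to pull out of the model's reply.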


Zero Shot Chain of Thought

Lazy man's COT. Adding a phrase like the one below encourages the model to reason and plan before answering.

What is [some hard reasoning problem].

Let's think this through step by step.
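A sketch of one common way to use the trigger phrase, modeled on the two-stage setup in Kojima et al., "Large Language Models are Zero-Shot Reasoners": first let the model write out its reasoning, then feed that reasoning back and ask only for the final answer. The helper names are hypothetical, and the actual LLM calls are left to you.

```python
TRIGGER = "Let's think this through step by step."


def reasoning_prompt(question: str) -> str:
    """Stage 1: the trigger phrase nudges the model to write out its reasoning."""
    return f"Q: {question}\nA: {TRIGGER}"


def answer_prompt(question: str, reasoning: str) -> str:
    """Stage 2: feed the generated reasoning back and ask only for the final answer."""
    return f"{reasoning_prompt(question)} {reasoning}\nTherefore, the answer is"


# reasoning = ask(reasoning_prompt(q))        # hypothetical LLM call
# final = ask(answer_prompt(q, reasoning))   # short, easy-to-parse answer
```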

Tree of Thoughts (TOT)

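Tree of Thoughts generalizes COT from a single chain of reasoning to a tree of intermediate "thoughts" that can be explored, evaluated and backtracked. It can be roughly simulated in a single prompt by imagining multiple experts, but it really needs code and multiple prompts. Expect to see advancements based on this technique that use advanced tree search to choose LLM responses when planning out longer-range tasks. Tree of Thoughts: Deliberate Problem Solving with Large Language Models, Yao et al.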
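A minimal sketch of the "needs code" version: a breadth-first search that keeps the best few partial chains of thoughts at each level. The paper explores both breadth-first and depth-first search. Here `propose` (generate candidate next thoughts) and `score` (rate a partial chain) are hypothetical hooks where you would call an LLM, replaced by toy functions in the usage at the bottom.

```python
from typing import Callable, List

Chain = List[str]


def tree_of_thoughts(
    problem: str,
    propose: Callable[[str, Chain], List[str]],
    score: Callable[[str, Chain], float],
    depth: int = 3,
    beam_width: int = 2,
) -> Chain:
    """Breadth-first search over partial chains of thoughts, keeping the best `beam_width` per level."""
    frontier: List[Chain] = [[]]  # start from an empty chain
    for _ in range(depth):
        candidates = [chain + [t] for chain in frontier for t in propose(problem, chain)]
        if not candidates:
            break
        candidates.sort(key=lambda c: score(problem, c), reverse=True)
        frontier = candidates[:beam_width]
    return frontier[0]


# Toy stand-ins for the LLM calls: each "thought" is a digit, and higher digit sums score higher
best = tree_of_thoughts(
    "toy problem",
    propose=lambda problem, chain: [str(d) for d in range(3)],
    score=lambda problem, chain: sum(int(t) for t in chain),
)
print(best)  # ['2', '2', '2']
```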

Imagine three different experts are answering this question.

They will brainstorm the answer step by step, reasoning carefully and taking all facts into consideration.

All experts will write down 1 step of their thinking, then share it with the group.

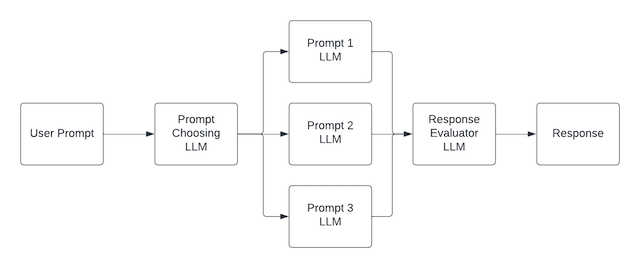

...

Have an LLM choose your prompt

I couldn't resist the old-school Yo Dawg! meme. Forgive me. But there has been a movement to use LLMs for more and more logic and reasoning. Some are even using them to choose or create a variety of prompts to send to other LLMs, whose responses are then fed into another LLM for evaluation, rating or rewriting.

Agents, Plugins & Tools

Agents, plugins and tools are related in that agents are multi-step prompts/processes that use added functionality. This functionality is called a plugin if you are using OpenAI or another third-party LLM, and a tool if you are using your own program to manage the prompting process.

In the example below the agent is initialized with two tools. One tool can search the Internet and the other can do math. The agent is given a prompt which can only be answered by finding recent information and then doing some math. It then figures out that it needs to use the tools to get that recent information and do the calculations.

The main difference between a plugin and a tool is that a tool is created, managed and called by a program you wrote while a plugin is widely available and is called by the OpenAI LLM.
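To make the agent loop less magical, here is a minimal, framework-free sketch of the step an agent repeats: look for a tool request in the model's output, run the tool, and return an observation to append to the prompt before asking the model again. The `Action: tool[input]` format, the `TOOLS` registry and `run_tool_step` are assumptions for illustration; LangChain's actual prompt format and parsing differ.

```python
import ast
import operator
import re
from typing import Optional

OPS = {ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul, ast.Div: operator.truediv}


def calculator(expression: str) -> str:
    """Toy math tool: evaluates + - * / on numbers only, by walking the syntax tree (no eval)."""
    def ev(node):
        if isinstance(node, ast.Expression):
            return ev(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)) \
                and not isinstance(node.value, bool):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in OPS:
            return OPS[type(node.op)](ev(node.left), ev(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -ev(node.operand)
        raise ValueError("unsupported expression")
    return str(ev(ast.parse(expression, mode="eval")))


TOOLS = {
    "calculator": calculator,
    "search": lambda q: f"(pretend search results for {q!r})",  # hypothetical web search stand-in
}
ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*)\]")


def run_tool_step(llm_output: str) -> Optional[str]:
    """If the model requested a tool, run it and return an Observation line; None means final answer."""
    match = ACTION_RE.search(llm_output)
    if not match:
        return None
    name, arg = match.groups()
    tool = TOOLS.get(name)
    try:
        result = tool(arg) if tool else f"unknown tool {name!r}"
    except (ValueError, ZeroDivisionError, SyntaxError) as err:
        result = f"error: {err}"
    return f"Observation: {result}"


print(run_tool_step("Thought: I need to compute the change.\nAction: calculator[42 - 37]"))
```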

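# Older (mid-2023) LangChain agent API; needs OPENAI_API_KEY and SERPAPI_API_KEY set
from langchain.agents import AgentType, initialize_agent, load_tools
from langchain.llms import OpenAI

llm = OpenAI(temperature=0)
# One tool searches the Internet (SerpAPI), the other does math using the LLM
tools = load_tools(["serpapi", "llm-math"], llm=llm)
agent = initialize_agent(tools, llm,
                         agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION)
agent.run("""What was the air quality yesterday in New
Brunswick? How did it change from the
previous day?""")

OpenAI Functions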

OpenAI recently introduced "functions". Functions are similar to plugins, but they are available only to your specific program. As with tools, you let OpenAI know that a function is available and how it can be used; if a prompt requires that functionality, the response will indicate that you should call the function and will provide the appropriate parameters.

In the example they give (explained below), we have the capability to get the weather for any location. The function is just an example and always returns 72 degrees, sunny and windy. Given a prompt that could use that information, the ChatGPT response will indicate that the weather function should be called and pass along the location the user specified. It is then up to your code to call the function and return the result to ChatGPT, which then uses the information to create a natural language response.

import json

# Dummy function: always reports the same weather
def get_current_weather(location, unit="fahrenheit"):
    """Get the current weather in a given location"""
    weather_info = {
        "location": location,
        "temperature": 72,
        "unit": unit,
        "forecast": ["sunny", "windy"],
    }
    return json.dumps(weather_info)

Describe the function to the model using a JSON Schema for its parameters, similar to an OpenAPI plugin spec:

function_def = {
    "name": "get_current_weather",
    "description": "Get the current weather in a given location",
    "parameters": {
        "type": "object",
        "properties": {
            "location": {
                "type": "string",
                "description": "The city and state, e.g. San Francisco, CA",
            },
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["location"],
    },
}
# The API takes a list of function definitions
functions = [function_def]

Check responses for function invocation requests

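import json
import openai  # legacy (pre-1.0) openai package; reads OPENAI_API_KEY from the environment

chat_history = [{"role": "user", "content": "What's the weather like in Boston?"}]

response = openai.ChatCompletion.create(
    model="gpt-3.5-turbo-0613",
    messages=chat_history,
    functions=functions,
    function_call="auto",
)
message = response["choices"][0]["message"]

if message.get("function_call"):
    function_name = message["function_call"]["name"]
    # Invoke the function and send the function result back to OpenAI.
    args = json.loads(message["function_call"]["arguments"])  # model-generated JSON string
    result = get_current_weather(**args)  # only one function is defined in this example
    chat_history.append(message)
    chat_history.append({"role": "function", "name": function_name, "content": result})
    response = openai.ChatCompletion.create(model="gpt-3.5-turbo-0613", messages=chat_history)
    print(response["choices"][0]["message"]["content"])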

Useful Libraries

Some useful libraries for prompt and context creation and management are LangChain, Llama Index and Haystack. These make it easy to get started, handle some of the complexity of sequencing prompts and managing context/memory but sometimes the abstraction gets in the way and you need to dig in deeper to figure out what is going on.

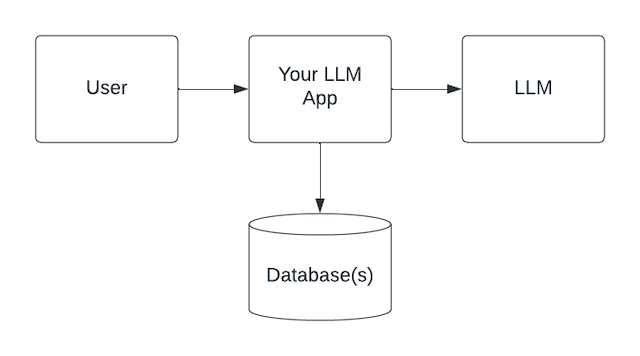

Useful Architecture

Relatedly, an architecture that is becoming common is to write a program, using LangChain for example, that takes user prompts, enhances them with information from a database, submits them to an LLM, handles any subsequent function invocations and then returns the final response to the user.

Note that your app can do any vetting, editing or censoring of the prompt or response you deem necessary. The database is used to get relevant information for ICL, examples for few shot prompting, as well as keep the history of the conversation(s) for long term memory.
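A minimal sketch of that wiring, with every component a hypothetical, pluggable callable: `retrieve` stands in for your database lookup and `llm` for any local or hosted model. Function-call handling is omitted, and the output vetting is a deliberately crude keyword filter.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class ChatApp:
    """Hypothetical app: retrieve context, build the prompt, call the LLM, vet the reply, remember it."""
    retrieve: Callable[[str], List[str]]  # e.g. vector or keyword search over your data
    llm: Callable[[str], str]             # any LLM call, local or hosted
    history: List[Tuple[str, str]] = field(default_factory=list)  # conversation memory
    blocked_terms: Tuple[str, ...] = ()   # lowercase terms the app refuses to return

    def ask(self, question: str) -> str:
        context = "\n".join(self.retrieve(question))
        past = "\n".join(f"User: {q}\nAssistant: {a}" for q, a in self.history[-3:])
        prompt = f"{past}\n\nContext:\n{context}\n\nUser: {question}\nAssistant:"
        reply = self.llm(prompt)
        if any(term in reply.lower() for term in self.blocked_terms):  # simple output vetting
            reply = "Sorry, I can't help with that."
        self.history.append((question, reply))
        return reply


app = ChatApp(
    retrieve=lambda q: ["Family sick leave: five days per year."],
    llm=lambda p: "Five days." if "Family sick leave" in p else "I don't know.",
)
print(app.ask("What is the family sick leave policy?"))
```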

The data source can be any internal or external data source you want to incorporate. Recently vector databases such as Pinecone and ChromaDB have become popular. These are super useful but in my opinion are not the final answer. In the future I believe we'll use a combination of relational databases, keyword retrieval, vector databases, knowledge bases, graph databases, etc.
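When several retrievers each return a ranked list, one common way to combine them is reciprocal rank fusion (RRF), sketched below with made-up document ids. The constant `k = 60` is the value usually used with RRF. Because it only needs ranks, it sidesteps the problem that keyword and vector scores are on different scales.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Merge ranked lists of document ids; documents ranked highly by several retrievers win."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


keyword_hits = ["handbook-12", "memo-3", "handbook-40"]
vector_hits = ["memo-3", "handbook-7", "handbook-12"]
print(reciprocal_rank_fusion([keyword_hits, vector_hits]))
# ['memo-3', 'handbook-12', 'handbook-7', 'handbook-40']
```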

Also, any part of this architecture can run in process, on premise, on a third party site or in any combination that is appropriate for your use case and the LLM can be a pre-trained 'foundational' model or one(s) specifically fine tuned for your application.

Running an LLM can be tricky and expensive

In general, larger models have a greater capacity to learn and can produce better responses if trained with enough appropriate data. However, we can't assume that running the largest models will be viable. First, let's look at model sizes.

GPT-2 had 1.5 billion parameters and GPT-3 175 billion. The details for GPT-4 have not been released, but by some estimates it has more than 500 billion parameters, or is even multiple models with over a trillion parameters in total. Open source models such as Llama, Falcon and others tend to come in roughly 7, 13, 30, 40 and 60 billion parameter versions. Stored in full precision, each parameter is a 32-bit (4-byte) floating point number, so 7 billion parameters translate to 28 GB of RAM for the weights alone.

Additionally, an LLM requires a huge number of linear algebra operations (matrix and vector multiplications), so depending on your latency requirements and use case you may want to use a GPU. However, the largest current consumer GPU (the Nvidia RTX 4090) has 24 GB of RAM, which means that even there you'll need to use some engineering tricks and make tradeoffs. If you want to run on a commercial GPU service, you can get GPUs with 40 and 80 GB of RAM for a price, though they can be hard to provision at times.
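The arithmetic above generalizes to a quick back-of-the-envelope check: parameters × bytes per parameter. This sketch counts only the weights, in decimal GB (1e9 bytes); activations, the attention cache and framework overhead need extra memory on top. The set of precisions is illustrative.

```python
BYTES_PER_PARAM = {"float32": 4, "float16/bfloat16": 2, "int8": 1, "4-bit": 0.5}


def weight_memory_gb(n_params: float, dtype: str) -> float:
    """Memory for the weights alone, in GB (1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


for billions in (7, 13, 40):
    row = {d: weight_memory_gb(billions * 1e9, d) for d in BYTES_PER_PARAM}
    fits = [d for d, gb in row.items() if gb <= 24]  # e.g. a 24 GB consumer GPU
    sizes = ", ".join(f"{d}={gb:.1f} GB" for d, gb in row.items())
    print(f"{billions}B: {sizes} | weights fit in 24 GB: {fits}")
```

A 7B model is 28 GB in float32 but 14 GB in 16-bit, while a 40B model only squeezes its weights under 24 GB at 4 bits, which is why the shrinking techniques below matter.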

Consequently you'll need to balance

- Accuracy, quality and features

- SLA - latency, throughput, availability

- Memory & compute constraints

- Privacy & security requirements

- Cost - unit economics

Shrinking an LLM

Many companies and organizations are working on getting models to run on smaller, less expensive and more readily available hardware. Their products will automate much of this work, but some of the engineering tricks you can use manually today include:

Sparsification - the parameters are large matrices of numbers, and many of those numbers are zero or close to zero. The theory is that anything multiplied by zero or a very small number does not contribute much to the final answer. Therefore it should be possible to clamp anything close to zero to zero and use sparse matrix techniques to reduce the amount of data that needs to be stored without affecting the output too much.
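A NumPy sketch of the simplest version of this idea, magnitude pruning: zero out the smallest-magnitude fraction of a weight matrix and measure how much a matrix-vector product changes. The random Gaussian matrix is a stand-in for real weights, so the exact error is illustrative only.

```python
import numpy as np


def magnitude_prune(weights: np.ndarray, sparsity: float) -> np.ndarray:
    """Set the `sparsity` fraction of entries with the smallest magnitudes to exactly zero."""
    threshold = np.quantile(np.abs(weights), sparsity)
    return np.where(np.abs(weights) < threshold, 0.0, weights)


rng = np.random.default_rng(0)
W = rng.normal(size=(256, 256)).astype(np.float32)  # stand-in for one weight matrix
x = rng.normal(size=256).astype(np.float32)

W_pruned = magnitude_prune(W, sparsity=0.3)
zeroed = np.mean(W_pruned == 0)
rel_err = np.linalg.norm(W @ x - W_pruned @ x) / np.linalg.norm(W @ x)
print(f"zeroed: {zeroed:.1%}, relative output error: {rel_err:.3f}")
```

Zeroing values does not save memory by itself. The matrix has to be stored in a sparse format (such as CSR), which also keeps an index per nonzero entry, so real savings tend to need fairly high sparsity or hardware support for structured sparsity.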

Quantization - as mentioned above, the parameters are typically 32-bit (4-byte) floating point numbers. Quantization attempts to do the same operations in 16- or 8-bit formats, including machine learning specific floating point formats such as bfloat16, thereby cutting the amount of memory required in half or to a quarter. Again, this is done in a way that minimizes the effect on the answer.
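A NumPy sketch of both steps. Casting to float16 halves memory. NumPy has no 8-bit float type, so for the 8-bit case this sketch swaps in a common alternative: symmetric ("absmax") int8 quantization with one float32 scale per row, dequantized back to float for comparison.

```python
import numpy as np

rng = np.random.default_rng(0)
W = rng.normal(scale=0.02, size=(512, 512)).astype(np.float32)  # stand-in weight matrix

# 16-bit: a plain cast halves the memory
W_fp16 = W.astype(np.float16)

# 8-bit: map each row's largest magnitude to 127 and round everything else to the nearest step
scale = np.abs(W).max(axis=1, keepdims=True) / 127.0
W_int8 = np.round(W / scale).astype(np.int8)
W_dequant = W_int8.astype(np.float32) * scale  # what the model effectively computes with

print("bytes:", W.nbytes, W_fp16.nbytes, W_int8.nbytes + scale.nbytes)
print("fp16 max error:", np.abs(W - W_fp16.astype(np.float32)).max())
print("int8 max error:", np.abs(W - W_dequant).max())
```

Each int8 value is off by at most half a quantization step (`scale / 2`) for its row. One large outlier in a row makes the step, and therefore the error, larger for the whole row, which is one reason practical schemes use finer-grained scales.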

Palettization - The next step beyond quantization (sometimes also simply called quantization) is to use 2- or 4-bit integers to index into a table of commonly occurring values, much like a GIF has limited colors compared to a PNG but is still useful. And of course the trick is to do it in a way that minimizes the effect on the output. Whether this effect is too detrimental for a particular application is use case specific.
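A NumPy sketch of palettization with a 16-entry (4-bit) table, built with a simple one-dimensional k-means: each weight is replaced by the nearest table entry, and only the table plus one small index per weight need to be stored. The helper and its parameters are hypothetical, and the indices are kept in `uint8` for simplicity; a real format would pack two 4-bit indices per byte.

```python
import numpy as np


def palettize(weights: np.ndarray, n_bits: int = 4, iters: int = 20):
    """1-D k-means: represent every weight by the nearest of 2**n_bits shared values."""
    flat = weights.ravel().astype(np.float64)
    n_colors = 2 ** n_bits
    # Start with entries spread evenly across the weight distribution
    palette = np.quantile(flat, (np.arange(n_colors) + 0.5) / n_colors)
    for _ in range(iters):
        idx = np.abs(flat[:, None] - palette[None, :]).argmin(axis=1)  # nearest entry per weight
        for c in range(n_colors):
            members = flat[idx == c]
            if members.size:
                palette[c] = members.mean()  # move each entry to the mean of its cluster
    idx = np.abs(flat[:, None] - palette[None, :]).argmin(axis=1)
    return palette.astype(weights.dtype), idx.astype(np.uint8).reshape(weights.shape)


rng = np.random.default_rng(0)
W = rng.normal(scale=0.02, size=(128, 128)).astype(np.float32)
palette, idx = palettize(W, n_bits=4)
W_pal = palette[idx]  # decode: look each index up in the table
rmse = np.sqrt(np.mean((W - W_pal) ** 2))
print(f"{palette.size} distinct values, RMSE {rmse:.5f} vs weight std {W.std():.5f}")
```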

Advanced optimization - ONNX, Core ML and other (sometimes hardware-specific) runtimes attempt to optimize the computation graph by fusing, reordering and otherwise optimizing operations. This is great when it's possible and may not affect the output, but it is often hardware specific, so different techniques will have to be applied based on the platform you are targeting.

Useful tools and services

HuggingFace.co has become the go-to place for machine learning models in general and LLMs in particular. They operate a model and dataset hub where you can download open source models and data. They create software that has become critical to running and fine tuning models. They have some great tutorials, and finally they provide hosting if you want to run a custom model but not host it yourself.

The software is primarily in Python and includes the Transformers library, which lets you run LLMs "locally"; Diffusers, which does the same for generative image models; Accelerate, for multi-GPU and mixed precision; and PEFT (Parameter Efficient Fine Tuning) for fine tuning models. Additionally, you'll find that the bitsandbytes library is extremely helpful for model quantization.

For example the code below runs a Vicuna 7B model on my laptop. It is fantastic that it runs but note that it takes 19s to generate a response and the response is not that impressive. This model could use some fine tuning and optimization to be useful.

import transformers

name = "TheBloke/vicuna-7B-1.1-HF"
tokenizer = transformers.AutoTokenizer.from_pretrained(name)
model = transformers.AutoModelForCausalLM.from_pretrained(name)
pipe = transformers.pipeline("text-generation", model=model,
                             tokenizer=tokenizer)
pipe("What is the capital of England?")

---

CPU times: user 19.1 s, sys: 98.9 ms, total: 19.2 s
Wall time: 19.2 s
[{'generated_text': 'What is the capital of England?\n\n1. London\n2. Manchester\n3. B'}]

Fine Tuning an LLM

In general training from scratch would be way too expensive for a large model for most organizations. But note that if you have a large quantity of high quality corporate/private data (as opposed to mixed quality Internet data) it may be possible to train a much smaller LLM for your use case.

In many cases fine tuning may be the way to go. Fine tuning is the process of continuing to train an already trained base model on whatever domain data is available. The idea is to freeze most of the weights and only train some, OR to add/replace some weights or weight updates with a more efficient structure.

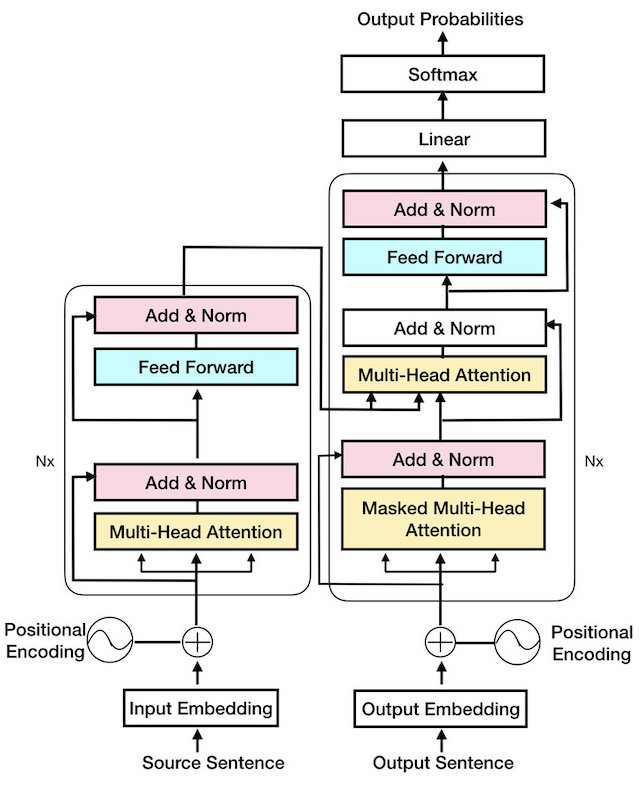

Attention is All You Need, Vaswani et al. https://arxiv.org/abs/1706.03762


To do that, two Parameter Efficient Fine Tuning (PEFT) techniques are commonly used:

- Low Rank Adaptation (LoRA) - Injects trainable rank decomposition matrices into a frozen model.

- Quantized LoRA (QLoRA) - Injects trainable rank decomposition matrices into a quantized and frozen model.

Both are available in HuggingFace's PEFT library. The details are a bit involved and this post is already too long, so we'll cover them in a later article.
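Pending that article, the core LoRA idea fits in a few lines of NumPy: keep the pretrained weight `W` frozen and learn a low-rank update `B @ A` scaled by `alpha / r`. Following the LoRA paper, `B` starts at zero so the adapted model initially matches the base model. The sizes and hyperparameters here are illustrative, and the sketch shows only the forward pass and parameter count, not training. In practice the PEFT library's `LoraConfig` and `get_peft_model` set this up for you.

```python
import numpy as np

d_in, d_out, r, alpha = 1024, 1024, 8, 16
rng = np.random.default_rng(0)

W = rng.normal(scale=0.02, size=(d_out, d_in))  # frozen pretrained weight (never updated)
A = rng.normal(scale=0.01, size=(r, d_in))      # trainable, rank r
B = np.zeros((d_out, r))                        # trainable, zero-initialized so the update starts at 0


def lora_forward(x: np.ndarray) -> np.ndarray:
    """y = W x + (alpha / r) * B A x; only A and B would receive gradient updates."""
    return W @ x + (alpha / r) * (B @ (A @ x))


x = rng.normal(size=d_in)
full, lora = W.size, A.size + B.size
print(f"trainable params: {lora:,} vs {full:,} ({lora / full:.2%})")
```

Training about 1.6% of the parameters for this layer is what makes LoRA cheap. QLoRA applies the same update on top of a quantized copy of `W`.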

Summary

- Prompt Engineering can get you pretty far if you take it seriously.

- Fine tune if you must; there are products and services that will help, but have a budget.

- If you want to fine tune consider a smaller model on your own high quality data.

- Run on prem if necessary but support your ops department.

In my opinion most production applications will use a mix of prompt engineering, generative & discriminative ML, and traditional software engineering. There are many companies and organizations working on improving LLM ops, and running a model on prem is possible, but you'll need to think through your requirements carefully.

If you have any questions please get in touch.