Something Big Is Happening - Analysis

This is an analysis of “Something Big Is Happening” by Matt Shumer.

Executive Summary

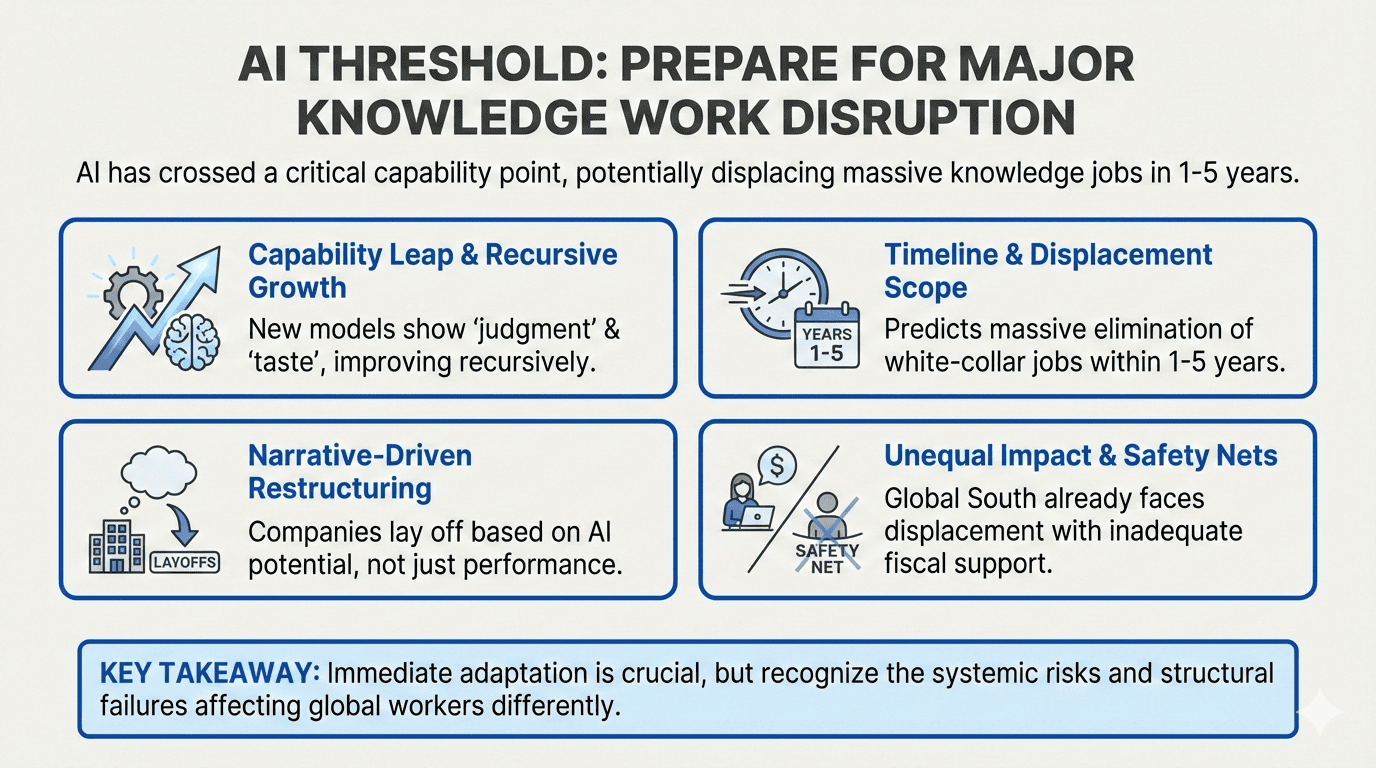

Matt Shumer’s thesis — that AI has crossed a critical capability threshold in February 2026 and will eliminate massive numbers of knowledge jobs within 1-5 years — survives multi-stakeholder scrutiny in direction but fails on timeline, mechanism, and scope. All seven stakeholders converge on a longer timeline of 5-7 years for major economic impact, though Global South displacement is already measurable (TCS cut 12,000 jobs while revenue grew 3%). The thesis’s most dangerous feature is not its technological prediction but its potential for self-fulfillment: HBR reported in January 2026 that companies are laying off workers “because of AI’s potential — not its performance.” The narrative of AI displacement can trigger layoffs, hiring freezes, and credit contraction independent of whether AI actually delivers the capabilities Shumer claims.

The METR RCT finding — experienced developers were 19% slower with AI while perceiving themselves 20% faster — and the broader AI Productivity Paradox (NBER: 90% of firms report no AI impact on employment or productivity) directly challenge the essay’s evidence base. However, “invisible displacement” through hiring freezes, attrition non-replacement, and contract restructuring is already underway and operates on market expectations rather than demonstrated capability. The essay’s advice to “adapt now” contains a structural paradox identified by multiple stakeholders: workers who adopt AI most eagerly may accelerate their own replaceability while the productivity surplus flows to firms, not workers.

The fiscal infrastructure to manage the transition is catastrophically underfunded: $41 per exposed worker in the US, $44 per worker in India, and $14.70 per worker in the Philippines. The essay is entirely blind to the Global South, where 5.67 million Indian IT workers and 1.7 million Philippine BPO workers face displacement with no meaningful safety nets. The strongest surviving version of the thesis: AI disruption is real and directionally correct, but its primary mechanism is narrative-driven market restructuring (not the technological inevitability the essay claims), and the timeline is roughly double what Shumer projects.

Thesis

- Primary thesis: AI capability crossed a critical threshold in early 2026. It can now perform most cognitive and knowledge work better than humans, can improve itself recursively, and will eliminate a massive portion of white-collar jobs within 1-5 years. People must start adapting immediately or be caught unprepared.

- Secondary claims:

  - AI models released on February 5, 2026 (GPT-5.3 Codex, Opus 4.6) represent a qualitative leap, demonstrating “judgment” and “taste”

  - The public perception gap is dangerous: most people are evaluating AI based on outdated 2023-2024 experiences

  - AI is now meaningfully contributing to building itself, initiating a recursive intelligence feedback loop

  - 50% of entry-level white-collar jobs will be eliminated within 1-5 years (per Amodei’s prediction, which the author considers conservative)

  - No cognitive work done “on a screen” is safe in the medium term

  - The biggest advantage individuals can have is being early adopters who adapt now

  - The technology carries existential-scale risks alongside transformative upside

- Implied goal: Readers should urgently adopt AI tools, restructure their financial and career planning, and begin adapting to a world where AI displaces most knowledge work, treating this as a personal emergency comparable to early COVID awareness.

- Interpretation notes: The essay is written by an AI startup CEO and investor, creating an undisclosed conflict of interest. It functions simultaneously as a genuine warning and as a contribution to what the AI Skeptics called a “capital-raising environment”: the urgency narrative serves the $89.4B AI venture capital ecosystem in which the author operates. This dual function does not invalidate the warning, but it does require readers to discount the essay’s claims about timeline and severity.

Conflict Mapping

Direct Conflicts

- AI Skeptics vs. AI Lab Leadership on capability claims: The Skeptics’ strongest evidence (METR RCT: developers 19% slower) directly challenges the Labs’ claim that AI writes “90% of code” internally. The Labs counter that the METR study used early-2025 models on codebases the developers already knew well, the context in which AI has the least room to add value. This conflict is empirically resolvable: a replication with February 2026 models would settle it. Until such a study exists, the disagreement persists.

- Enterprise Buyers vs. the thesis on deployment speed: Enterprise reality (95% pilot failure, 56% of CEOs getting “nothing,” 63.7% with no formalized AI initiative) fundamentally contradicts the essay’s claim that AI is already displacing workers at scale. The Enterprise Buyers demonstrate that capability ≠ deployability. However, they concede that displacement is already occurring through “invisible mechanisms” (hiring freezes and contract restructuring) that bypass the enterprise adoption funnel entirely.

- Mid-Career Workers vs. the essay’s “adapt now” advice: The productivity surplus capture trap means early AI adoption may accelerate, rather than prevent, worker displacement. The essay frames adaptation as an individual survival strategy; the Mid-Career Workers reveal it as a collective action problem in which individually rational behavior is collectively catastrophic (a prisoner’s dilemma).

- Global South vs. the essay’s scope: The essay addresses only Western, upper-middle-class knowledge workers. For the 5.67 million Indian IT workers and 1.7 million Philippine BPO workers whose livelihoods depend on cognitive labor arbitrage, its advice is “structurally incoherent”: it assumes an organizational agency that outsourcing models are specifically designed to eliminate.
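The Mid-Career Workers’ prisoner’s-dilemma point can be made concrete with a two-worker payoff table. This is a minimal sketch: the payoff numbers are hypothetical, chosen only to satisfy the prisoner’s-dilemma ordering, and are not data from any stakeholder. The code checks that “adopt” is each worker’s dominant strategy even though mutual restraint would pay both more.

```python
from typing import List, Optional, Tuple

# Hypothetical payoffs (illustrative only, not stakeholder data) for a symmetric
# two-worker game. Keys are (my_choice, other_choice); values are my payoff.
# Ordering temptation > reward > punishment > sucker makes it a prisoner's dilemma.
PAYOFF = {
    ("hold", "hold"): 3,    # reward: neither adopts early, bargaining power intact
    ("adopt", "hold"): 5,   # temptation: I adopt alone and gain a temporary edge
    ("hold", "adopt"): 0,   # sucker: the other worker adopts, I look less productive
    ("adopt", "adopt"): 1,  # punishment: both adopt, surplus flows to the firm
}
CHOICES = ("hold", "adopt")


def best_response(other_choice: str) -> str:
    """Choice that maximizes my payoff given the other worker's choice."""
    return max(CHOICES, key=lambda mine: PAYOFF[(mine, other_choice)])


def dominant_strategy() -> Optional[str]:
    """A choice that is the best response to every opposing choice, if one exists."""
    responses = {best_response(other) for other in CHOICES}
    return responses.pop() if len(responses) == 1 else None


def nash_equilibria() -> List[Tuple[str, str]]:
    """Pure-strategy profiles where neither worker gains by deviating alone.

    The game is symmetric, so the same best_response serves both workers.
    """
    return [(a, b) for a in CHOICES for b in CHOICES
            if best_response(b) == a and best_response(a) == b]


print("dominant strategy:", dominant_strategy())  # adopt
print("equilibria:", nash_equilibria())            # [('adopt', 'adopt')]
print("payoff at equilibrium vs. mutual hold:",
      PAYOFF[("adopt", "adopt")], PAYOFF[("hold", "hold")])  # 1 3
```

With these payoffs, adopting is each worker’s best move whatever the other does, yet the resulting equilibrium pays each worker 1 instead of the 3 available under mutual restraint. Escaping that outcome means changing the payoffs themselves (for example through collective bargaining), not making better individual choices.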

Alignment Clusters

- Skeptics + Enterprise Buyers: Both argue that adoption friction extends the timeline well beyond 1-5 years. The Enterprise Buyers provide the empirical grounding (pilot failure rates, organizational resistance) that the Skeptics’ historical analogies lack.

- Policymakers + Global South: Both identify catastrophically inadequate institutional responses. The fiscal death spiral (US) and gesture-scale reskilling (Global South) are variants of the same structural failure: a policy apparatus designed for gradual, sector-specific transitions now facing simultaneous, cross-sector disruption.

- Mid-Career Workers + Parents: Both are downstream absorbers of AI Lab externalities with limited agency. Both face the paradox that the essay’s advice (adapt individually) may be counterproductive, either by accelerating replaceability or by locking families into irrecoverable educational bets.

Natural Coalitions

- Policymakers + Global South + Mid-Career Workers: A labor protection coalition focused on transition funding, licensing as a deployment brake, and collective bargaining over the terms of AI adoption.

- Enterprise Buyers + Skeptics: An evidence-based adoption coalition that could resist narrative-driven restructuring, provided it is grounded in actual deployment data rather than capability demonstrations.

- Labs + Parents (unlikely but structurally possible): The Labs’ interest in long-term societal acceptance aligns with the Parents’ interest in predictable educational pathways, but only if labs accept responsibility for the transition costs they currently externalize.

Irreconcilable Tensions

- Lab revenue growth vs. safety team departures: Labs cannot simultaneously maximize deployment speed (revenue) and ensure responsible deployment (safety). The departures of Anthropic’s safeguards lead and of OpenAI safety executives suggest this tension is already being resolved in favor of revenue.

- Individual adaptation vs. collective action: The essay’s individualist framing (“be the person who adapts”) structurally undermines the collective responses (policy advocacy, organized labor, coordinated employer demands) that would actually improve outcomes for the median worker.

- Global South displacement vs. Western adoption friction: Displacement is accelerating in low-regulation, cost-arbitrage economies precisely because the adoption friction that protects Western workers does not exist there. Western workers’ protection comes at the cost of Global South workers, who bear disproportionate harm first.

Debate Highlights

1. The METR RCT Controversy

AI Skeptics challenged the entire panel with the METR Randomized Controlled Trial finding: experienced developers were 19% slower with AI while perceiving themselves 20% faster. This was the most contested evidence in the debate.

- What was at stake: Whether the essay’s core evidence — personal productivity anecdotes — is contaminated by a systematic perception bias that makes AI tools feel effective when they are not.

- What it revealed: The Labs and Mid-Career Workers both identified critical scope limitations: the study used early-2025 models on codebases the developers already knew well, the scenario in which human expertise has its maximum advantage. The Global South made the sharpest counter: “The decision-makers in our industry do not care about actual productivity gains. They care about perceived productivity gains and cost reduction.” The METR finding may be correct that AI does not help experienced developers while remaining irrelevant to the displacement of routine knowledge workers.

- Outcome: No stakeholder dismissed the finding outright. The Labs offered a testable prediction: a replication with February 2026 models will show improvement. The Skeptics strengthened their position by adding the Solow Paradox framing (NBER: 90% of firms report no impact). The debate remains empirically open and critically important.
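The size of the METR perception gap is easy to understate. A minimal arithmetic sketch, assuming “19% slower” means completion time was 1.19× the no-AI baseline and “perceiving themselves 20% faster” means developers believed completion time was 0.80× the baseline (the study’s exact estimation protocol may differ):

```python
def perception_gap(actual_slowdown: float, perceived_speedup: float) -> float:
    """Ratio of actual to perceived task time against a no-AI baseline of 1.0.

    Assumption: actual time = 1 + actual_slowdown, and perceived time =
    1 - perceived_speedup (i.e. "20% faster" read as "20% less time").
    """
    actual_time = 1.0 + actual_slowdown
    perceived_time = 1.0 - perceived_speedup
    return actual_time / perceived_time


# METR figures as reported above: 19% slower in fact, 20% faster as perceived.
gap = perception_gap(0.19, 0.20)
print(f"tasks took {gap:.2f}x as long as developers believed")  # 1.49x
```

Under this reading, developers misjudged their own task time by roughly half again. Reading “20% faster” as 1.2× speed instead gives 1.19 × 1.2 ≈ 1.43x, so the conclusion does not hinge on the convention: self-reported productivity anecdotes, including the essay’s, carry little weight against controlled measurement.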

2. The Narrative-Driven Displacement Discovery

Enterprise Buyers and Mid-Career Workers independently converged on a mechanism that the essay does not describe: displacement driven by the narrative of AI capability rather than demonstrated capability.

- What was at stake: Whether AI needs to actually work in order to displace workers.

- What it revealed: HBR’s January 2026 finding that “companies are laying off workers because of AI’s potential — not its performance” transforms the thesis from a technological prediction into a self-fulfilling prophecy. The Enterprise Buyers identified a feedback loop: AI labs demonstrate capabilities → CEOs announce restructuring → markets reprice → banks tighten lending → contraction validates the fear. This loop can generate a genuine economic crisis even if AI never actually replaces 20% of knowledge workers.

- Outcome: This was the debate’s most important emergent finding — visible only through cross-stakeholder analysis, invisible from any single perspective. It means the Skeptics can be right about AI capability being overstated while the thesis still triggers the predicted outcomes through narrative momentum.

3. The Global South as Canary in the Coal Mine

Workers in the Global South challenged the entire panel’s timeline assumptions with present-tense displacement data.

- What was at stake: Whether AI displacement is a future prediction or a current reality.

- What it revealed: TCS cutting 12,000 workers while revenue grew 3%, India’s top five firms showing seven consecutive quarters of negative net employee addition, and the ILO’s 89% high-risk assessment for Philippine BPO workers constitute the strongest empirical evidence of actual AI-driven displacement — but in economies the essay ignores entirely. The Skeptics conceded their “no discernible disruption” claim is “geographically parochial” — it holds for US aggregate data but fails globally. The Global South also identified that even if AI underperforms, the narrative drives contract restructuring: “300+ contracts facing 30-40% pricing pressure” based on AI expectations, not demonstrated AI performance.

- Outcome: Multiple stakeholders updated their positions. The Skeptics acknowledged a “dual-track” assessment: displacement in BPO is real and current; Western knowledge work is overstated on 1-5 year timelines. The Labs conceded they have “no credible plan to address Global South displacement.”

4. The Asymmetric Risk for Parents

Parents challenged the Skeptics on the practical implications of their analytical rigor.

- What was at stake: Whether intellectual skepticism about AI timelines is a responsible framework for irreversible parental decisions.

- What it revealed: Parents identified that the Skeptics’ longer timeline (3-10 years) actually worsens their situation by shifting disruption to overlap with their children’s degree programs. A child entering high school in 2026 graduates college in 2034 — squarely within even the Skeptics’ disruption window. The Skeptics’ analytical position, while rigorous, offers “no constructive guidance for parents operating under uncertainty.” The Parents’ own data was devastating: 97% of parents fear AI career disruption, entry-level job postings dropped 35% from 2023-2025, only 7% of new hires in 2024 were recent graduates. CS enrollment — the “safe” AI-adjacent major — declined 6-15% in 2025.

- Outcome: The Parents emerged with the strongest “hedge, don’t pivot” framework: maintain conventional credentials while supplementing with AI literacy, and watch specific falsifiers (entry-level hiring ratios by 2028, college wage premium by 2029) before making irreversible pivots.

5. The AI Financial Sustainability Question

Skeptics and Mid-Career Workers jointly raised whether the AI industry’s financial structure can sustain the disruption trajectory.

- What was at stake: Whether the tools people are being told to build their careers around will exist in stable form in 3-5 years.

- What it revealed: OpenAI projects $14B in losses for 2026 and $44B cumulative through 2028, with profitability not expected until 2029-2030. The Skeptics identified an internal contradiction in the Labs’ position: “If your realistic assessment (3-10 years) is correct, the $380B valuation is a bubble. If the valuation is rational, your realistic assessment is wrong. You cannot hold both simultaneously.” The Labs conceded the vulnerability but drew a comparison to early Amazon (losses for seven years before profitability). The Mid-Career Workers identified a perverse dynamic: workers adopt AI tools → growth metrics help labs raise capital → labs keep subsidizing below-cost prices → if the capital cycle breaks, workers who built workflows around AI tools are stranded.

- Outcome: This remains genuinely unresolved. A capital contraction would paradoxically help workers by slowing displacement, but would harm anyone who invested in AI-first career pivots.

Key Falsifiers

Consolidated from all stakeholder analyses. Grouped by what they test.

Thesis Viability (Does AI Actually Displace Workers?)

- METR RCT replication with February 2026 models: If a comparable RCT with GPT-5.3 Codex or Opus 4.6 shows a 20%+ speedup for experienced developers, the productivity paradox may be specific to a model generation. If the slowdown persists, the essay’s capability claims rest on anecdote rather than controlled evidence. (Identified by: AI Skeptics, AI Lab Leadership. Timeline: mid-2027.)

- METR task duration acceleration: If AI task-completion horizons keep doubling every ~4 months and pass multi-day thresholds by Q1 2027, the recursive improvement claim gains strong empirical support. If the doubling time lengthens significantly, the improvement trajectory is slower than exponential. (Identified by: Parents, AI Lab Leadership. Timeline: Q1 2027.)

- BLS knowledge-worker employment declining 5%+: Direct macro-level confirmation of displacement at scale. (Identified by: AI Skeptics. Timeline: 12-24 months.)
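The doubling claim in the task-duration falsifier implies a specific growth curve. A minimal sketch, assuming a clean exponential H(t) = H0 · 2^(t / Td) with doubling time Td ≈ 4 months; the starting horizon and the multi-day target used below are hypothetical placeholders, not METR measurements:

```python
import math


def horizon_after(h0_hours: float, months: float, doubling_months: float = 4.0) -> float:
    """Task horizon after `months`, assuming pure exponential growth H0 * 2**(t / Td)."""
    return h0_hours * 2 ** (months / doubling_months)


def months_to_reach(h0_hours: float, target_hours: float, doubling_months: float = 4.0) -> float:
    """Months needed to grow from h0 to target under the same assumption: Td * log2(target / h0)."""
    return doubling_months * math.log2(target_hours / h0_hours)


# A 4-month doubling time implies 2**(12 / 4) = 8x growth per year.
print(horizon_after(1.0, 12))  # 8.0

# Hypothetical inputs: a 2-hour horizon today and a 3-day (72-hour) target.
print(round(months_to_reach(2.0, 72.0), 1))  # 20.7
```

Fitting observed horizons against this curve is one way to tell whether the ~4-month doubling is holding; a longer fitted doubling time pushes every threshold crossing proportionally later, since months_to_reach scales linearly with Td.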

Implementation Feasibility (Does Enterprise Adoption Scale?)

- Fortune 500 workforce reduction >30% via AI within 3 years: The strongest test of whether capability translates into organizational transformation. (Identified by: Enterprise Buyers, who currently consider it unlikely.)

- Enterprise pilot success rate improvement: If pilot success rates rise from ~5% to ~20%+ within 18 months, the deployment gap is closing. If they remain flat, the adoption paradox persists. (Identified by: Enterprise Buyers, AI Lab Leadership.)

- AI-native startup competitive displacement: If new AI-native firms begin capturing significant market share from traditional firms in law, finance, or consulting within 2 years, the displacement pathway bypasses enterprise adoption entirely. (Identified by: Mid-Career Workers.)

Stakeholder-Specific Impact

- Entry-level hiring ratios by sector (2027-2028): If entry-level-to-total hiring ratios decline by more than 25% in AI-exposed occupations, the expertise depreciation spiral is confirmed. This is an earlier indicator than aggregate employment data. (Identified by: Workforce Policymakers, Mid-Career Workers, Parents.)

- Mid-career compensation stability through 2029: If median compensation in AI-exposed professions stays within 10% of 2025 levels, the surplus capture trap is manageable. If it declines more sharply, workers are bearing the cost. (Identified by: Mid-Career Workers.)

- BPO revenue decline in India/Philippines >15%: If BPO revenue from these countries declines while AI services revenue from the same clients grows proportionally, the complementarity paradox is confirmed. (Identified by: Workers in the Global South.)

- College wage premium erosion by 2029: If the premium for a bachelor’s degree in AI-exposed fields declines significantly, the traditional educational playbook is failing. (Identified by: Parents.)

Financial System Stability

- Consumer credit delinquency spike in knowledge-worker zip codes: Mortgage delinquency is currently concentrated in lower-income areas. If it spreads to higher-income professional zip codes, the credit transmission mechanism is activating. (Identified by: Multiple stakeholders.)

- OpenAI/Anthropic financial trajectory: If either major lab fails to reach profitability by 2029 and requires emergency capital raises, the AI tool ecosystem may contract, simultaneously slowing displacement and stranding AI-dependent workflows. (Identified by: AI Skeptics, Mid-Career Workers.)

Assumptions Audit

Critical assumptions the thesis depends on, rated by plausibility.

| # | Assumption | Plausibility | Supporting Evidence | Undermining Evidence |
|---|---|---|---|---|
| 1 | AI capability continues improving exponentially | Moderate | METR task horizons doubling every ~4 months; GPT-5.3 self-building claim; lab revenue growth (Anthropic $14B ARR) | METR RCT slowdown; pre-training scaling may be plateauing; post-training gains less proven at scale |
| 2 | Capability translates rapidly to deployment | Weak | Individual productivity demos; some firm-level cuts (Klarna -40%, McKinsey 5,000) | 95% pilot failure; 90% of firms report no impact (NBER); 63.7% with no formalized initiative; Solow Paradox |
| 3 | Displacement occurs through direct replacement | Weak | Some role eliminations (Salesforce, 4,000 support roles) | Primary mechanism is invisible: hiring freezes, attrition, contract restructuring. HBR: layoffs based on “potential, not performance” |
| 4 | Workers can meaningfully “adapt” through adoption | Weak-Moderate | Early adopters gain a temporary visibility advantage; some productivity gains for routine tasks (Stanford: 14% for customer service) | Productivity surplus capture trap; METR perception gap; adoption may accelerate replaceability; AI tool ecosystem financially unstable |
| 5 | The recursive improvement loop is accelerating | Moderate | GPT-5.3 Codex self-building claim; Amodei “gathering steam” statements; METR task horizon data | Large gap between “improves specific algorithms in sandboxed environments” and “autonomously improves general intelligence”; incentive to overstate |
| 6 | 50% entry-level elimination within 1-5 years | Weak | Entry-level hiring already down 35% (2023-2025); individual AI capabilities in legal, financial, medical analysis | Enterprise adoption friction; regulatory barriers; organizational inertia; Adoption Paradox Funnel |
| 7 | AI’s impact is historically unprecedented | Moderate | Generality of the technology (unlike blockchain/metaverse); breadth across coding, writing, analysis | Every general-purpose technology (GPT) has attracted this claim; the internet and electricity also went through long periods of overhyped expectations before eventual transformation |
| 8 | The essay’s advice is accessible to all affected workers | Weak | $20/month subscription is accessible for Western professionals | Structurally inaccessible to Global South workers; requires organizational agency that outsourcing eliminates; assumes stable AI tool ecosystem |

System Stability

Reinforcing Loops

- Narrative-Displacement Loop (most dangerous): AI labs demonstrate capabilities → media amplifies urgency → executives announce restructuring → markets reprice → layoffs occur → narrative validated → more urgency. This loop can operate independently of actual AI capability.

- Capability-Investment Loop: AI capabilities attract VC ($89.4B in 2025) → capital funds more compute and talent → capabilities improve → more investment. The loop is self-reinforcing until capital patience breaks or returns fail to materialize.

- Expertise Depreciation Spiral: Firms cut entry-level positions → junior talent pipeline hollows out → no mid-career professionals develop → senior expertise becomes irreplaceable → temporary scarcity value for current seniors → but long-term institutional knowledge erodes.

- Global South Pricing Death Spiral: AI enables cost reduction claims → clients demand contract renegotiation (30-40% pricing pressure) → BPO firms cut headcount or margins → reduced revenue funds less reskilling → workers become less competitive → more displacement.

- AI-Fluent Family Advantage Loop: Affluent families adopt AI tools → children gain an AI literacy advantage → educational and career outcomes diverge → inequality compounds across generations and across nations.

Countervailing Forces

- Enterprise Adoption Friction: Organizational complexity, change management resistance, data quality issues, compliance requirements, and liability concerns collectively slow deployment far beyond the essay’s timeline. The 95% pilot failure rate is the strongest current brake.

- Regulatory and Licensing Moats: Licensed professions (law, medicine, accounting) have built-in friction: someone must sign off, take legal responsibility, stand in a courtroom. This does not prevent displacement, but it slows it and preserves senior positions while eliminating junior ones.

- AI Financial Sustainability Constraint: The AI industry is burning cash at extraordinary rates ($14B projected loss for OpenAI in 2026). If capital patience breaks before profitability arrives, the capability trajectory stalls and the narrative loop weakens.

- Political Backlash: Mass visible displacement would generate populist regulation (automation taxes, mandatory human oversight, AI deployment restrictions). This is the strongest systemic brake, but it activates only after significant harm has occurred.

- Productivity Paradox Friction: Individual AI productivity gains are absorbed by downstream bottlenecks (per Faros data: review time +91%, bugs +9%, PR size +154%). Organizational productivity may not improve even as individual task speed increases.
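The Productivity Paradox Friction point is essentially Amdahl’s law applied to a delivery pipeline: speeding up one stage helps only in proportion to that stage’s share of total time, and growth in other stages can cancel the gain. A minimal sketch with hypothetical stage shares and a hypothetical 2x coding speedup; only the +91% review-time figure comes from the Faros data cited above:

```python
# Hypothetical share of end-to-end delivery time spent in each stage (sums to 1.0).
# These shares are illustrative assumptions, not Faros or METR data.
BASELINE_SHARES = {"coding": 0.40, "review": 0.20, "testing": 0.25, "coordination": 0.15}


def relative_lead_time(stage_multipliers: dict) -> float:
    """End-to-end time relative to the baseline (1.0), Amdahl's-law style.

    Each stage's share is scaled by its time multiplier (0.5 = half the time,
    1.91 = 91% more time); stages not listed keep a multiplier of 1.0.
    """
    return sum(share * stage_multipliers.get(stage, 1.0)
               for stage, share in BASELINE_SHARES.items())


# Assumed: AI halves coding time. Review time +91% is the Faros figure cited above.
coding_only = relative_lead_time({"coding": 0.5})
with_review_cost = relative_lead_time({"coding": 0.5, "review": 1.91})
print(f"coding 2x faster, nothing else changes: {coding_only:.3f}")       # 0.800
print(f"coding 2x faster, review time +91%:     {with_review_cost:.3f}")  # 0.982
```

Under these assumed shares, doubling coding speed cuts end-to-end lead time by only 20% even before any downstream cost appears, and the 91% rise in review time erases almost all of that gain. The general lesson holds for any shares: the individual speedup is diluted by everything it does not touch.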

Unrealistic Cooperation Requirements

- The essay assumes workers and firms share aligned interests in AI adoption. They do not. Firms capture surplus; workers bear displacement risk.

- The essay assumes a functioning policy apparatus will manage the transition. Current funding is $41 per exposed worker, orders of magnitude below what is needed.

- The essay assumes AI tool ecosystems will remain stable and accessible. They are subsidized, loss-making, and concentrated in three providers.

Scaling Fragility

- What works at the individual level (one developer describing tasks to AI) breaks at organizational scale (integration, review, quality assurance, compliance, coordination).

- What works in low-regulation environments (Global South BPO) faces binding constraints in high-regulation ones (Western professional services).

- What works in capability demonstrations breaks in production environments where error costs are high and accountability is required.

Top Fragilities

Ranked by severity:

- Narrative-Driven Displacement Independent of Capability (severity: 9/10, category: coordination_failure): HBR documents companies laying off workers based on AI’s potential, not its performance. The thesis can trigger its own predicted outcomes through executive belief and market momentum. Workers can be displaced by a prediction that turns out to be wrong, with no mechanism to reverse the harm once it is realized.

- Fiscal Death Spiral (severity: 8/10, category: constraint_bottleneck): AI displacement erodes the payroll tax revenue that funds transition programs. At $41 per exposed worker in the US and $14.70 per worker in the Philippines, transition infrastructure is already at gesture scale. The more displacement occurs, the less fiscal capacity exists to manage it. This is structurally embedded in current tax systems, not a policy oversight.

- Expertise Depreciation Spiral (severity: 8/10, category: assumption_failure): Cutting entry-level hiring now (already down 35%) destroys the senior talent pipeline of 2035. Entry-level roles serve as apprenticeships that build the judgment AI cannot yet replicate. By the time organizations discover they need mid-career professionals, the pipeline will have been dry for a decade. This damage is invisible in current metrics and irreversible once it compounds.

- Global South Displacement Without Safety Nets (severity: 8/10, category: external_shock): 5.67 million Indian IT workers and 1.7 million Philippine BPO workers face displacement with reskilling investment at 1% of what is needed, no unemployment insurance, and no regulatory leverage over the AI labs driving their displacement. The “complementarity paradox” means AI augmentation eliminates the cost advantage that sustains entire national economies, not just individual jobs.

- AI Industry Financial Instability (severity: 7/10, category: assumption_failure): OpenAI projects $44B in cumulative losses through 2028. The tools workers are told to build careers around may not exist in stable form on the relevant timescale. A capital crisis would paradoxically slow displacement, but it would also strand anyone who invested in AI-first career pivots and destabilize enterprise workflows built around AI providers.

- Perception-Reality Gap in Productivity (severity: 7/10, category: assumption_failure): METR RCT: 19% slower, perceiving 20% faster. NBER: 90% of firms report no impact. If AI productivity gains are largely perceptual, the entire adaptation framework collapses: workers invest time in tools that do not make them more productive while believing they do, and enterprises restructure around expected gains that may never materialize.

- Timeline Compression Error (severity: 6/10, category: assumption_failure): The essay claims 1-5 years; the seven stakeholders converge on 5-7+ years. If the essay’s timeline is wrong by a factor of 2-3x, the advice to treat this as a “personal emergency” causes unnecessary financial and psychological harm, whereas monitoring and hedging would have produced better outcomes.
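The Fiscal Death Spiral fragility describes a ratio that worsens from both ends: displacement shrinks the payroll tax base that funds transition programs while enlarging the population that needs them. A toy sketch of that ratio, assuming (hypothetically) that a fixed share of payroll tax paid by still-employed workers is split among displaced workers; none of the parameter values below come from the analysis:

```python
def funding_per_displaced(workforce: int, displaced: int, wage: float,
                          tax_rate: float, share_to_transition: float) -> float:
    """Transition funding per displaced worker under a toy payroll-tax model.

    Revenue comes only from workers who are still employed, and all of the
    earmarked share is split among the displaced. Every parameter is hypothetical.
    """
    if displaced <= 0:
        raise ValueError("displaced must be positive")
    employed = workforce - displaced
    revenue = employed * wage * tax_rate * share_to_transition
    return revenue / displaced


# Hypothetical economy: 1,000 workers, $60,000 wages, 15% payroll tax, 1% earmarked.
for displaced in (10, 50, 100, 200):
    per_worker = funding_per_displaced(1_000, displaced, 60_000, 0.15, 0.01)
    print(displaced, round(per_worker, 2))
```

In this toy model, doubling displacement from 100 to 200 workers cuts funding per displaced worker by more than half, because the numerator shrinks as the denominator grows. That compounding, rather than any single budget decision, is what the analysis means by a structural spiral.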

Conditional Thesis

- Surviving thesis: AI capability is advancing rapidly and will substantially transform knowledge work over the next 5-10 years. The disruption is real but is proceeding primarily through invisible mechanisms (hiring freezes, attrition, contract restructuring, pricing pressure) rather than the dramatic replacement the essay describes. The narrative of AI displacement is itself a causal force, capable of triggering layoffs and market restructuring independent of demonstrated AI capability. Early engagement with AI tools is prudent but should be framed as professional literacy, not survival strategy. Institutional and collective responses matter more than individual adaptation.

- Conditions that must hold:

  - AI capability continues improving (even at a decelerating rate)

  - Enterprise adoption friction does not increase dramatically (via regulation or a capital crisis)

  - The AI industry remains financially viable long enough for deployment patterns to mature

  - Political backlash does not produce prohibition-level regulation before the technology demonstrates broad economic value

Unresolvable Tradeoffs

| Tradeoff | Who Loses | Normative Commitment Required |
|---|---|---|
| Speed of AI deployment vs. worker transition time | Workers in all categories, especially Global South | Society must decide whether productivity gains justify displacement faster than institutions can absorb |
| Individual adaptation vs. collective bargaining power | Workers who adopt individually accelerate displacement for the group | Choosing individual adaptation means accepting that aggregate worker outcomes will worsen |
| Lab revenue growth vs. safety/governance capacity | Everyone, if safety capacity degrades faster than capability grows | Society must decide whether to regulate deployment speed at the cost of competitive position |
| Global South cost savings vs. Global South employment | Global South workers and the national economies dependent on outsourcing | Western firms must decide whether cost optimization via AI justifies destabilizing developing economies |
| Protecting current educational pathways vs. preparing for disrupted ones | Parents and children making irreversible educational investments | Families must make bets under radical uncertainty with no institutional guidance adequate to the moment |
| Transparency about AI capabilities vs. market stability | Either AI labs (if forced to temper claims) or financial markets (if claims trigger panic) | Society must decide how much uncertainty markets can absorb without destabilizing |

Decision Guidance

Recommended Action

For individuals: Engage seriously with AI tools as professional literacy ($20/month, one hour daily), but do not make irreversible career or financial pivots based on the essay’s 1-5 year timeline. Maintain conventional credentials and professional networks. Build financial resilience (reduce debt, increase savings, diversify income). Monitor the falsifiers listed above, particularly entry-level hiring ratios and METR replication studies.

For organizations: Pursue evidence-based AI adoption — measure actual productivity impact rigorously before restructuring. Resist narrative-driven headcount targets. Preserve apprenticeship pipelines (entry-level hiring) even if AI handles some junior tasks. The 95% pilot failure rate argues for caution, not acceleration.

For policymakers: Treat this as a 3-7 year preparation window, not a 1-5 year emergency, but begin building fiscal infrastructure now — the window closes faster than legislative cycles can respond. Prioritize: (1) entry-level hiring monitoring systems as early warning indicators, (2) payroll tax diversification to prevent the fiscal death spiral, (3) occupational licensing review to preserve apprenticeship functions, (4) international coordination on Global South transition support.

For parents: Hedge within the credential playbook — do not abandon conventional education but supplement with AI literacy. Favor fields with licensed accountability (law, medicine) over pure knowledge work (consulting, analysis). Watch entry-level hiring data by 2028 before making major educational pivots. The counter-cyclical opportunity is real: if enterprises overcorrect and then discover AI cannot replace junior workers, credentialed graduates will face reduced competition.

Conditions Under Which This Holds

- AI capability continues improving but the Productivity Paradox (individual gains ≠ organizational gains) persists for 2-3+ years

- Enterprise adoption remains friction-laden (pilot success rates below 20%)

- The AI industry does not experience a catastrophic capital crisis

- Political backlash does not produce either prohibition-level regulation or total deregulation

If Those Conditions Fail

- If METR replication shows 30%+ speedup AND enterprise pilot success rates reach 30%+ → the essay’s timeline may be correct; shift from hedging to active restructuring

- If OpenAI/Anthropic face a capital crisis and AI tools degrade → displacement slows but AI-dependent workflows are stranded; revert to non-AI professional strategies

- If the narrative-displacement loop triggers a financial crisis before AI matures → the predicted outcomes arrive without the predicted benefits; focus shifts from adaptation to crisis management and political advocacy

- If METR task duration accelerates past multi-day thresholds by Q1 2027 (validating recursive improvement) → all timeline estimates are invalid; the correct response becomes financial and political, not educational or career-based

Falsifier Watchlist

Monitor these indicators — if any trigger, revisit this guidance:

- METR RCT replication with Feb 2026 models showing 20%+ speedup (validates capability claims)

- Entry-level hiring ratios declining >25% in AI-exposed occupations by 2028 (confirms expertise depreciation spiral)

- BPO revenue from India/Philippines declining >15% with proportional AI services growth (confirms Global South displacement at scale)

- Consumer credit delinquency spreading to higher-income professional zip codes (confirms credit transmission mechanism)

- METR task duration accelerating past multi-day thresholds by Q1 2027 (validates recursive improvement claims)

- Fortune 500 achieving >15% workforce reduction through AI within 2 years (confirms enterprise deployment at scale)
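The watchlist works as a set of threshold rules with an "any trigger means revisit" policy. A minimal Python sketch of that check follows. It assumes hypothetical indicator field names and readings expressed as percentage changes. The thresholds come from the watchlist, but no real data feed or source is implied:

```python
from dataclasses import dataclass


@dataclass
class Indicators:
    """Hypothetical indicator readings; field names are illustrative only."""
    metr_speedup_pct: float                # replicated METR speedup with Feb 2026 models
    entry_level_hiring_change_pct: float   # change in entry-level hiring ratio (negative = decline)
    bpo_revenue_change_pct: float          # India/Philippines BPO revenue change
    ai_services_growth_proportional: bool  # AI services grew roughly in step with BPO losses
    professional_zip_delinquency: bool     # delinquency spreading to higher-income professional zips
    metr_multiday_by_q1_2027: bool         # task duration past multi-day thresholds by Q1 2027
    f500_ai_workforce_cut_pct: float       # Fortune 500 workforce reduction via AI within 2 years


def triggered_falsifiers(x: Indicators) -> list[str]:
    """Return the watchlist items whose thresholds are crossed."""
    hits = []
    if x.metr_speedup_pct >= 20:
        hits.append("METR replication: 20%+ speedup")
    if x.entry_level_hiring_change_pct < -25:
        hits.append("Entry-level hiring down >25%")
    if x.bpo_revenue_change_pct < -15 and x.ai_services_growth_proportional:
        hits.append("BPO revenue down >15% with AI services growth")
    if x.professional_zip_delinquency:
        hits.append("Credit delinquency in professional zip codes")
    if x.metr_multiday_by_q1_2027:
        hits.append("METR task duration past multi-day thresholds")
    if x.f500_ai_workforce_cut_pct > 15:
        hits.append("Fortune 500 AI workforce reduction >15%")
    return hits


# Illustrative readings only, not observed data.
readings = Indicators(12.0, -30.0, -5.0, False, False, False, 4.0)
hits = triggered_falsifiers(readings)
print(hits)  # ['Entry-level hiring down >25%']
print("revisit guidance" if hits else "hold")  # revisit guidance
```

The BPO rule requires both conditions together because the watchlist ties the revenue decline to proportional AI services growth. A revenue drop with no offsetting AI growth would point to a different cause.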

Blind Spots & Limitations

Stakeholder Categories Not Represented

- Competitors or adversaries (Category 5): No stakeholder represented firms or nations competing to lead in AI development. The geopolitical competition dimension (US-China AI race, EU regulatory response) was discussed only tangentially. This dimension could fundamentally alter deployment timelines and regulatory constraints.

- Capital providers/investors (Category 6): While the AI Skeptics identified the $89.4B VC environment and AI Lab Leadership discussed valuations, no dedicated investor stakeholder was included. Investor behavior (capital patience, funding cycles) is a critical variable in AI industry sustainability.

Data or Context Gaps

- No controlled productivity studies with February 2026 models: The central debate about AI capability rests on a study using early-2025 models. The most important empirical question in this analysis — whether the February 2026 models represent a genuine qualitative jump — cannot be answered with available data.

- Limited firm-level deployment data: Aggregate statistics (95% pilot failure, 56% of CEOs getting “nothing”) obscure significant variation by sector, firm size, and use case. The debate would benefit from sector-specific adoption data.

- No longitudinal data on AI-augmented worker retention: The key falsifier (do firms retain AI-implementing workers or lay them off within 18 months?) has not been studied. Only preliminary, anecdotal evidence exists.

Ungrounded Claims

- The essay’s claim that AI demonstrates “judgment” and “taste” is a subjective assessment from a single user (the author) with financial incentives to promote this interpretation. No independent evaluation of these qualities exists.

- The recursive improvement claim (“AI helped build itself”) is based on OpenAI’s self-reported technical documentation. No independent verification or quantification of AI’s contribution to its own development has been published.

- Several stakeholders’ web search findings (specific statistics on layoffs, hiring rates, financial projections) were used as reported without independent verification within this analysis.

Scope Boundaries

- This analysis focused on knowledge work displacement and did not examine AI’s impact on physical labor, creative work, or scientific research — all of which the essay discusses.

- The existential risk dimension (AI deception, biological weapons, surveillance states) was largely excluded to focus on the workforce impact thesis.

- The analysis did not model specific financial market scenarios (credit crisis mechanics, banking system stress tests) that would be needed to evaluate the essay’s most extreme predictions.

- The positive potential of AI (medical breakthroughs, educational access, democratized creation) was acknowledged by several stakeholders but not deeply analyzed, as the essay’s primary intervention is about disruption risk.

Appendix: Stakeholder Cards

Mid-Career Knowledge Workers

Category: Directly harmed | Impact: 9/10

Identity & Role

White-collar professionals in law, finance, accounting, and consulting with 10-20 years of experience, established careers, mortgages, and families who face the most acute disruption risk. They represent the demographic most directly addressed by the essay — and most harmed by its advice if followed uncritically.

Thesis Interpretation

The thesis is experienced as a personal threat wrapped in empowerment rhetoric. The essay tells them to “be early” and “adapt” while the structural dynamics ensure that adaptation accelerates replaceability.

Steelman Argument

The essay is directionally correct that AI capability is advancing rapidly and that early engagement provides temporary advantage. Workers who understand AI tools can position themselves as “orchestrators” of AI-augmented workflows, capturing value during the transition period. The alternative — ignoring AI — guarantees marginalization.

Toulmin Breakdown

- Claim: Mid-career knowledge workers face acute near-term disruption that individual adaptation alone cannot solve.

- Grounds: Productivity surplus capture trap; HBR finding on potential-driven layoffs; 35% decline in entry-level job postings (2023-2025); McKinsey deploying 12,000 AI agents with 5,000 human role cuts.

- Warrant: Historical precedent shows productivity gains from automation accrue to capital, not labor (wage-productivity decoupling since 1979).

- Backing: METR RCT perception gap; NBER 90% no-impact finding; Stanford GSB 14% productivity gain concentrated in less-experienced workers.

- Qualifier: Effect varies sharply by profession — unregulated knowledge work (2-4 year disruption window) vs. regulated professions (5-8 years). Licensed accountability provides meaningful but temporary protection.

- Rebuttals: (1) If AI capability plateaus, the expertise depreciation spiral reverses and experienced workers regain value. (2) If the Gartner Trough of Disillusionment arrives in 2-3 years, there may be a counter-cyclical hiring boom.

Gains & Losses

- Gains if thesis correct: Temporary scarcity value for AI-proficient mid-career workers; potential reshoring of work from the Global South.

- Losses if thesis correct: Income erosion, career disruption, mortgage stress, professional identity crisis. Productivity gains captured by firms, not workers.

Key Constraints

Career lock-in (mortgage, family obligations, specialized expertise), retraining costs, professional identity tied to current role, collective action deficit in individualistic professional cultures.

Feedback Loops

Productivity surplus capture → firm headcount reduction → market narrative validation → more cuts. Expertise depreciation spiral: cutting juniors now → no senior pipeline by 2035.

Likely Actions

Cautious AI adoption with internal advocacy; financial de-risking (savings, debt reduction); professional network diversification. Collective action is unlikely (probability below 5%) but would carry high expected value if it occurred.

Falsifiers

Whether firms retain AI-implementing workers or lay them off within 18 months. Mid-career compensation stability through 2029. METR replication with February 2026 models.

Final Confidence: 0.74

AI Lab Leadership

Category: Direct beneficiaries | Impact: 10/10

Identity & Role

The “few hundred researchers at a handful of companies” (OpenAI, Anthropic, Google DeepMind) shaping AI’s trajectory. They benefit from urgency narratives that justify funding, talent acquisition, and policy influence. They are simultaneously the most informed and the most conflicted actors.

Thesis Interpretation

The thesis is “broadly correct but strategically understated on risks.” The capability claims are directionally accurate but the timeline is compressed. The recursive improvement claim is real but its pace is overstated. The essay serves the labs’ interests by promoting urgency while externalizing transition costs.

Steelman Argument

The capabilities are real and verified by revenue ($14B ARR Anthropic, $12B+ ARR OpenAI). The recursive improvement is documented (GPT-5.3 self-building). The direction of change is not in question. The disagreement is about how quickly capability translates into economic impact, and on that question the labs’ own assessment (3-10 years) is more conservative than the essay’s (1-5 years).

Toulmin Breakdown

- Claim: The thesis is directionally correct but temporally compressed and systemically underspecified.

- Grounds: Revenue growth validates capability; safety team departures validate risk; enterprise adoption data validates deployment friction.

- Warrant: Lab insiders have the best information about capability trajectories but the worst incentive structure for honest communication about timelines.

- Backing: METR task horizons doubling; GPT-5.3 self-building claim; $89.4B VC ecosystem.

- Qualifier: The 3-10 year realistic window is wide enough to be nearly unfalsifiable.

- Rebuttals: (1) The $380B valuation implies expectations inconsistent with the 3-10 year window: either the valuation is a bubble or the timeline is wrong. (2) Ongoing departures from safety teams undermine the “responsible development” framing.

Gains & Losses

Five concrete ways labs benefit from urgency: capital ($30B Series G), talent (compensation at $1.5M/employee), regulatory leverage, user adoption, equity valuations. Loss: legitimacy crisis if safety concerns materialize or capability claims disappoint.

Key Constraints

Talent retention crisis (safety researchers leaving); regulatory capture risk; financial sustainability ($14B projected loss for OpenAI); competitive pressure preventing moderation of claims.

Feedback Loops

Capability → investment → more capability (reinforcing until capital patience breaks). Urgency narrative → revenue → more urgency (self-reinforcing marketing). Safety capacity erosion → risk increase → more departures (vicious cycle).

Likely Actions

Continue accelerating deployment while lobbying for regulatory frameworks that favor incumbents. Publicly acknowledge risks while structurally incentivizing speed. Pivot toward enterprise revenue to demonstrate ROI and counter the Productivity Paradox narrative.

Falsifiers

Safety incidents causing regulatory crackdown. Recursive improvement plateauing at specific complexity thresholds. METR replication failing to show improvement with Feb 2026 models. Capital markets repricing AI stocks significantly downward.

Final Confidence: 0.72

Workforce Policymakers

Category: Regulators or rule-makers | Impact: 8/10

Identity & Role

Labor department officials, legislators, and regulatory bodies who must manage large-scale economic transitions but operate on much slower timescales than the disruption described.

Thesis Interpretation

The thesis creates a policy emergency that existing institutional frameworks are not designed to handle. The displacement mechanism (simultaneous, cross-sector, knowledge work) has no historical precedent in the policy toolkit.

Steelman Argument

The most important policy finding is the asymmetry of error costs: over-preparation wastes single-digit billions; under-preparation exposes 72 million knowledge workers to displacement with $41/worker in transition funding.
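A rough scale check helps here. Assume the $41/worker figure is total US transition funding split evenly across the 72 million exposed workers. This even split is an assumption, since the underlying budget total is not given. On that basis, the implied total comes to just under $3 billion:

```python
def implied_total_funding(exposed_workers: int, funding_per_worker_usd: int) -> int:
    """Total funding implied by a per-worker figure, assuming an even split."""
    return exposed_workers * funding_per_worker_usd


# Inputs are the Steelman figures; the even-split assumption is illustrative.
total = implied_total_funding(72_000_000, 41)
print(f"${total / 1e9:.2f}B")  # $2.95B
```

If that assumption holds, current funding is itself in the single-digit billions. That is the same order of magnitude the stakeholder assigns to the cost of over-preparation.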

Toulmin Breakdown

- Claim: Existing policy infrastructure is structurally inadequate for the disruption the thesis describes, regardless of the exact timeline.

- Grounds: $41/exposed worker in federal funding; WIOA reauthorization stalled; legislative cycles of 2-4 years vs. disruption potentially arriving faster.

- Warrant: Historical workforce transitions (manufacturing, trade) show that delayed policy response compounds harm and is far more expensive than preemptive investment.

- Backing: Fiscal death spiral mechanism; jurisdictional fragmentation; federal-state policy conflict.

- Qualifier: If the Enterprise Buyers’ 5-10 year timeline holds, there is a preparation window — but it is short by historical standards of legislative response.

- Rebuttals: (1) If displacement occurs through hiring freezes rather than layoffs, traditional unemployment metrics will not trigger policy responses. (2) Federal policy under current administration is actively undermining state-level protections.

Gains & Losses

Successful preemptive policy would be a historic institutional achievement. Failure produces fiscal crisis, political backlash, and potential systemic instability.

Key Constraints

Political will; fiscal mechanisms tied to payroll taxes that erode with displacement; jurisdictional fragmentation; expertise drain (policy talent recruited by AI labs at premium compensation).

Feedback Loops

Fiscal death spiral: displacement → payroll tax decline → less transition funding → worse outcomes → more displacement. Policy lag loop: crisis → legislative process → implementation → by the time policy arrives, the problem has evolved.

Likely Actions

Measurement and monitoring phase (DOL AI Workforce Hub); incremental legislative proposals ($250M scale); occupational licensing as deployment brake; international coordination attempts.

Falsifiers

Unemployment claims in AI-exposed occupations exceeding 2x baseline within 12 months. Entry-level hiring ratios declining >25% (earlier indicator). Payroll tax revenue declining in AI-concentrated regions.

Final Confidence: 0.73

Parents of School-Age Children

Category: Long-term/future stakeholders | Impact: 7/10

Identity & Role

Families making educational and career-guidance decisions under radical uncertainty. They carry the weight of irreversible 4-8 year decisions (college choice, career guidance) when the ground is shifting beneath all conventional wisdom.

Thesis Interpretation

The essay’s advice to “rethink what you’re telling your kids” is emotionally compelling but practically vacuous. It identifies the problem (traditional pathways may not lead to stable employment) without offering an actionable alternative pathway. “Be builders and learners” is not a college application strategy.

Steelman Argument

The strongest argument for parental engagement: 97% of parents already fear AI career disruption. Entry-level job postings dropped 35% (2023-2025). Only 7% of 2024 new hires were recent graduates. CS enrollment declined 6-15%. The data confirms that the on-ramp is being demolished while parents are told to accelerate onto it.

Toulmin Breakdown

- Claim: Parents should hedge within the credential playbook rather than pivot away from it, while demanding institutional AI literacy and watching specific falsifiers.

- Grounds: Asymmetric risk (can’t afford to be wrong); demographic cliff (15% fewer HS grads by 2029); signal-to-noise ratio approaching zero; counter-cyclical opportunity if enterprises overcorrect.

- Warrant: Irreversible decisions under radical uncertainty require hedging, not betting.

- Backing: Every stakeholder agrees deployment timeline is longer than capability timeline; enterprise adoption data suggests disruption is not imminent at organizational scale.

- Qualifier: If METR task duration accelerates past multi-day thresholds by Q1 2027, hedging is insufficient — the correct response becomes financial and political.

- Rebuttals: (1) The Skeptics’ longer timeline (3-10 years) coincides with children’s degree programs, so slower disruption could arrive just as they graduate, making it worse, not better. (2) The AI-fluent family advantage loop means inaction also carries risk.

Gains & Losses

No gains from thesis being correct — only varying degrees of managed loss. Potential counter-cyclical opportunity if enterprises overcorrect and then need credentialed graduates.

Key Constraints

Educational system inertia; 4-8 year decision lag; anxiety paralysis; no institutional pathway for the essay’s recommended “human” skills (cultural intuition, leading through ambiguity).

Feedback Loops

Parental anxiety cascade: essay → anxiety → premature pivots → regret if disruption is slower → loss of trust in future guidance. AI-fluent family advantage: affluent adoption → literacy gap → inequality compounds.

Likely Actions

Hedge within credential playbook; supplement with AI literacy; diversify children’s skill development; demand school-level AI integration; monitor falsifiers before making major pivots.

Falsifiers

Entry-level hiring data by 2028. College wage premium by 2029. METR task duration trajectory by Q1 2027. University enrollment patterns in AI-exposed vs. AI-adjacent fields.

Final Confidence: 0.72

Workers in the Global South

Category: Marginalized or edge-case actors | Impact: 9/10

Identity & Role

Knowledge workers in outsourcing economies — India (5.67M IT workers), Philippines (1.7M BPO), Kenya, and similar countries — whose livelihoods depend on cognitive labor arbitrage. AI threatens to eliminate the cost advantage sustaining entire industries and national economies. The essay is completely blind to their existence.

Thesis Interpretation

The essay’s thesis may be roughly correct for one specific domain — cost-arbitrage service economies — while being dramatically premature for Western knowledge workers. The irony is devastating: the essay warns privileged Western workers about imminent disruption while ignoring the workers for whom disruption is already here.

Steelman Argument

Revenue-headcount decoupling is empirically confirmed (TCS: -12,000 jobs, +3% revenue). The complementarity paradox means AI augmentation eliminates the cost advantage that sustains entire economies, not just individual jobs. The core claim: “The AI narrative is already restructuring the outsourcing market in ways that harm Global South workers, and actual AI capability will compound this harm as it materializes.”

Toulmin Breakdown

- Claim: The essay is structurally blind to the Global South, and its prescriptions are architecturally incoherent for outsourced labor.

- Grounds: Revenue-headcount decoupling; ILO 89% high-risk assessment; reskilling at gesture scale ($14.70/worker Philippines); essay’s advice requires organizational agency that outsourcing eliminates.

- Warrant: Cost-arbitrage industries are the zero-friction case — displacement arrives first where regulatory protection is weakest.

- Backing: 300+ contracts facing 30-40% pricing pressure; Sama terminating Kenyan operations; seven consecutive quarters of negative net employee addition at India’s top five firms.

- Qualifier: Some growth in higher-complexity outsourced work (GCCs, AI data annotation) may partially offset displacement in routine work — creating deepening inequality within the Global South workforce.

- Rebuttals: (1) If the AI investment bubble collapses, contract restructuring pressure may ease — but structural changes to contracts don’t reverse when hype cools. (2) The METR RCT finding is least applicable to outsourced work (routine tasks, unfamiliar codebases) — precisely the context where AI likely does add value.

Gains & Losses

Catastrophic losses: entire economic models (BPO as development pathway) threatened. No comparable gains — the essay’s “your dreams got closer” framing ($20/month AI access) is structurally inaccessible at Global South income levels.

Key Constraints

No safety nets; weak regulatory capacity; digital infrastructure gaps; zero leverage over AI labs; geographic and organizational distance from decision-makers; language and cultural barriers to “orchestration” roles.

Feedback Loops

Pricing death spiral; complementarity paradox → fewer workers → less training → less competitive; brain drain loop → trained workers emigrate → capacity erodes; macro-fiscal impact → BPO sector taxes decline → government fiscal capacity erodes.

Likely Actions

Industry associations lobbying for transition funding (likely gesture-scale); individual workers attempting to upskill with limited resources; migration to non-AI-exposed sectors where possible; growing political pressure in affected countries.

Falsifiers

BPO revenue decline >15% with proportional AI services growth. BPO campus vacancy rates. Remittance flow declines from outsourcing cities. Informal economy contraction in BPO-dependent regions.

Final Confidence: 0.73

Enterprise Buyers & Executives

Category: Implementers/operators | Impact: 8/10

Identity & Role

CTOs, COOs, managing partners who must decide adoption speed. They are the transmission mechanism — without their adoption decisions, the thesis does not materialize at scale. They balance productivity gains against workforce disruption, liability, compliance, and organizational stability.

Thesis Interpretation

The thesis conflates individual productivity gains (a developer’s personal experience) with organizational transformation. The gap between “AI can do X” and “our organization has deployed AI to do X at scale” is massive and poorly understood by technology enthusiasts, including the essay’s author.

Steelman Argument

The Adoption Paradox Funnel: 95% pilot failure and 56% of CEOs getting “nothing,” yet 90%+ still increasing AI spend. This is the most empirically grounded challenge to the thesis’s timeline. Enterprise adoption is friction-laden in ways the essay does not acknowledge: integration complexity, data quality, change management, liability, compliance, organizational culture.

Toulmin Breakdown

- Claim: Capability does not equal deployability, and the organizational constraints on AI adoption are binding on a 5-10 year timescale.

- Grounds: 95% pilot failure (MIT); 56% of CEOs reporting “nothing” (survey data); 63.7% with no formalized initiative; 42% of companies scrapped AI initiatives in 2025.

- Warrant: Enterprise software adoption historically follows long cycles of failed pilots before standardization.

- Backing: Vendor lock-in concerns (88% API concentration in 3 providers); counterparty risk (OpenAI $14B projected losses); organizational disruption (42% say adoption is “tearing the company apart”).

- Qualifier: The thesis may bypass enterprise adoption entirely through AI-native startups that compete with incumbents at radically lower headcount. The narrative-displacement loop means enterprises may restructure based on expectations, not deployments.

- Rebuttals: (1) The 5% that succeed are market leaders who set industry norms — competitive pressure cascades from a few successful adopters. (2) Global South displacement shows what happens in the zero-friction case and provides a leading indicator.

Gains & Losses

Potentially enormous productivity gains if adoption succeeds. Risks: premature workforce restructuring, vendor lock-in, counterparty risk, organizational disruption, reputational damage.

Key Constraints

Integration complexity; change management resistance; data quality; liability and compliance; vendor lock-in; counterparty risk (provider financial stability); organizational culture.

Feedback Loops

ROI Illusion Loop: perceived value → investment → inability to admit failure → more investment. Competitive cascade: one firm’s AI adoption → pressure on rivals → industry-wide restructuring. Narrative-reality feedback: announced intentions → market repricing → actual restructuring.

Likely Actions

Continued investment with increasing measurement rigor; targeted role elimination in clearly automatable functions; preservation of core human capabilities while building “AI readiness”; risk committee attention to counterparty and lock-in concerns.

Falsifiers

Fortune 500 achieving >30% workforce reduction via AI within 3 years. Enterprise pilot success rates reaching >20%. AI-native startup competitive displacement in major sectors.

Final Confidence: 0.72

AI Skeptics & Tech Critics

Category: Meta-level observers | Impact: 6/10

Identity & Role

Researchers, journalists, and public intellectuals who challenge AI hype cycles, question industry self-interest, and advocate for evidence-based assessment. Not anti-technology — anti-hype and pro-rigor.

Thesis Interpretation

The essay exhibits classic hype-cycle characteristics: insider urgency, unfalsifiable timeline claims, emotional manipulation (COVID analogy), undisclosed financial conflicts, and systematic exclusion of contradictory evidence. It functions as a “capital-raising environment” for the $89.4B AI VC ecosystem.

Steelman Argument

Three independent lines of evidence converge: (1) METR RCT: developers were 19% slower while perceiving themselves 20% faster; (2) NBER: 90% of firms report no AI impact on productivity; (3) Faros: individual task gains are absorbed by downstream bottlenecks (review time +91%, bugs +9%). The productivity paradox is established, not hypothesized. The essay’s evidence is exclusively anecdotal and contaminated by the perception bias the METR study documents.
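The METR perception gap is easier to see on a concrete task. The minimal sketch below makes two assumptions. First, “19% slower” means completion time rose by 19%. Second, “perceiving themselves 20% faster” means developers believed completion time fell by 20%. The 100-minute baseline task is hypothetical:

```python
def perception_gap(baseline_min: float, actual_slowdown: float = 0.19,
                   perceived_speedup: float = 0.20) -> tuple[float, float]:
    """Return (actual, perceived) minutes with AI for a task of baseline_min."""
    actual = baseline_min * (1 + actual_slowdown)       # measured: took 19% longer
    perceived = baseline_min * (1 - perceived_speedup)  # believed: took 20% less
    return actual, perceived


actual, perceived = perception_gap(100)
print(f"actual {actual:.0f} min, perceived {perceived:.0f} min")
# actual 119 min, perceived 80 min
```

Under these assumptions, the self-estimate misses the measured outcome by about 39 minutes, roughly 39% of the baseline. This is why the Skeptics treat first-person reports of speedup as weak evidence on their own.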

Toulmin Breakdown

- Claim: The essay’s timeline is overstated by a factor of 2-5x, its evidence base is contaminated by perception bias, and its narrative serves the author’s financial interests.

- Grounds: METR RCT; NBER firm-level study; Faros developer analytics; Brookings “no discernible disruption” 33 months post-ChatGPT; historical pattern of insider-driven technology urgency narratives.

- Warrant: The burden of proof for claims of unprecedented disruption lies with the claimant, and anecdotal evidence from interested parties does not meet that burden.

- Backing: Author is AI startup CEO/investor; $89.4B VC environment creates systematic amplification; every major technology has had a “this time is different” moment.

- Qualifier: The Skeptics’ position is most defensible for Western regulated professions and least defensible for Global South cost-arbitrage economies, where displacement is already measurable. Updated to dual-track assessment: BPO displacement is real and current; Western knowledge work displacement is overstated on 1-5 year timelines.

- Rebuttals: (1) AI is a general-purpose technology, unlike blockchain/metaverse — the better comparison is electricity or the internet, which did eventually transform the economy after long periods of overhyped expectations. (2) The narrative itself causes harm through the expertise depreciation spiral, even if the technology prediction is wrong.

Gains & Losses

Intellectual vindication if hype deflates; reputational risk if AI transformation proves faster than predicted. No direct economic exposure.

Key Constraints

Being dismissed as Luddites; difficulty proving a negative; their own evidence (METR RCT) is already out of date relative to the newest models.

Feedback Loops

Hype-capital spiral: AI narrative → VC investment → more narrative → more investment (until capital patience breaks). The Skeptics’ intervention can only slow this loop, not stop it, because the financial incentives overwhelm the epistemic corrections.

Likely Actions

Continued publication of rigorous counter-evidence; advocacy for controlled studies with current models; public engagement to temper panic-driven decision-making.

Falsifiers

METR RCT replication with Feb 2026 models showing 20%+ speedup (overturns centerpiece evidence). BLS knowledge-worker employment declining 5%+ (confirms displacement at scale). Sustained exponential capability benchmarks AND matching enterprise deployment gains within 2 years (validates “this time is different” claim).