The Shift to Automated Agentic Coding

Prepare for the coming shift in software development.

By the end of the year, software development will no longer be primarily the act of typing code. It will be the act of designing intent.

Over the holidays at the end of 2025 there was a lot of hype about automated agents writing code that is never read, reviewed, or maintained by a human. Over the last few months I’ve spent a lot of time using agentic coding to develop several applications (such as https://guidedsurveys.com), and I’m here to say I do not believe it is just hype. I expect most successful new projects to be written by autonomous agents.

Systems based on, or similar to, Claude Code are not just helping developers with autocomplete or smart snippets - they are planning entire features, generating and reviewing code for sub-steps, refactoring sub-systems, writing tests, updating documentation, and committing code across dozens of files in a single pass.

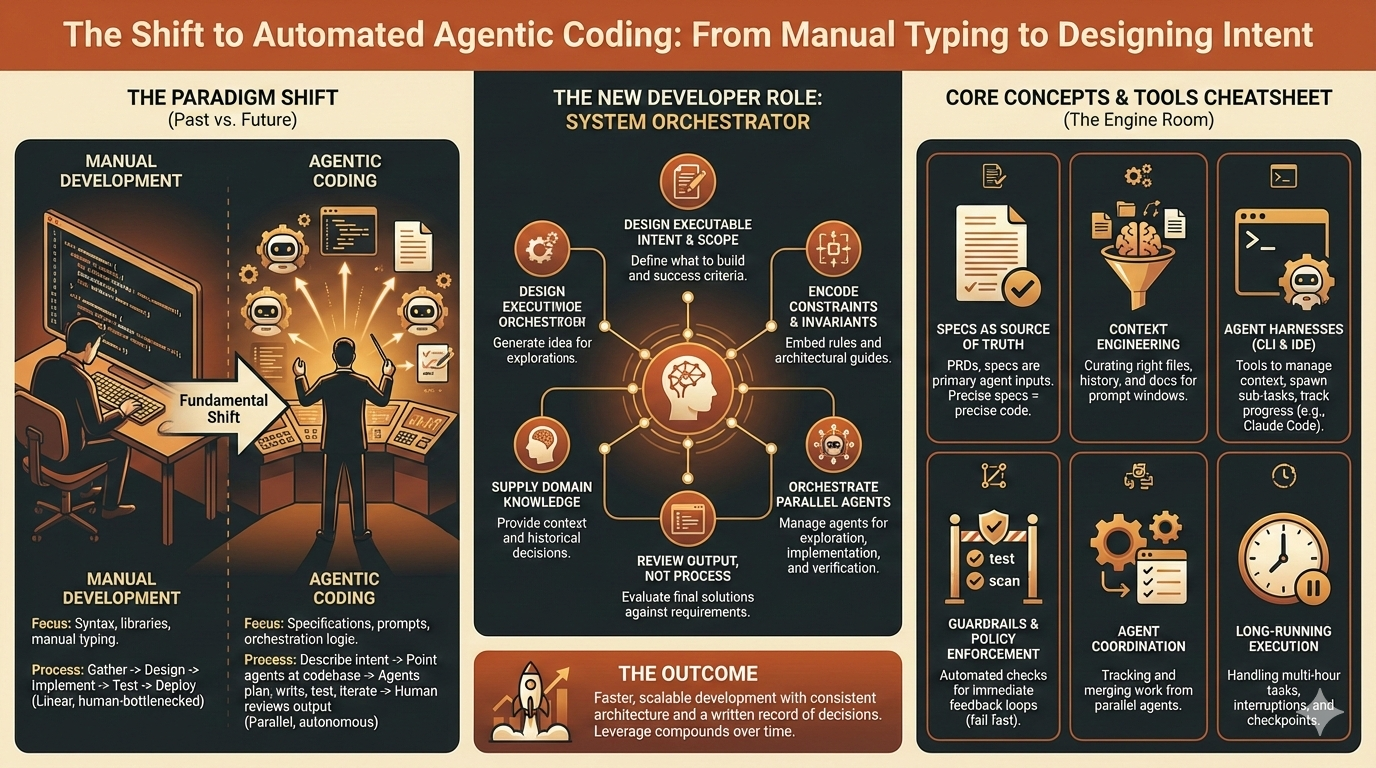

The result is a fundamental shift in what "coding" means.

I’ve spent ~40 years learning syntax, studying libraries and APIs, working through architectures, and manually typing code line by line. I think most of that is going away. Instead, I’ll be managing teams of agents and directing them to do in days or hours what would have taken me weeks or months.

We’ve moved from writing binary → assembly → C → Python, Ruby and JavaScript, from sed → vi → IDEs, and more recently from tab completion → smart snippets → prompt engineering. At each change we had to adjust how we do our work.

Now we’re entering context engineering and agent management, where the quality of product requirement documents, specs, style guides, architectural decision rules and records, and other historical decisions matters more than any single prompt. The next frontier is on the horizon: fully autonomous, multi-agent systems that plan, explore, implement, test, and deploy software, directed by humans but with minimal human intervention.

Traditional Manual Development Process

Before autonomous agents and context engineering the process looked like this at a high level:

- Requirements gathering – product managers, stakeholders, or customers articulate needs through documents, tickets, or meetings.

- Design – engineers and designers translate requirements into architecture diagrams, API contracts, and UI mockups.

- Implementation – developers manually write code, file by file, reasoning locally about logic and side effects.

- Testing – unit tests, integration tests, and QA cycles catch regressions and edge cases.

- Deployment – code is merged, released, monitored, and iterated on.

For some domains, like kernel work, real-time systems, or safety-critical software, human involvement and oversight will remain critical. For most others, however, the manual process has limits: you will struggle with consistency, exploration, latency, and other cognitive bottlenecks.

In the agentic model, you describe what you want built, point the agent at your codebase, and let it plan the implementation, write the code, run the tests, and iterate until it passes. You review/approve the output, not the process.
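Here is a minimal sketch of that loop, just to make the shape concrete. It is not how any particular harness works: `run_agent_loop`, `propose_patch`, `run_tests`, and the stand-in agent below are all hypothetical names I made up for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class CheckResult:
    passed: bool
    feedback: str  # failure output handed back to the agent


def run_agent_loop(
    spec: str,
    propose_patch: Callable[[str, Optional[str]], str],
    run_tests: Callable[[str], CheckResult],
    max_iterations: int = 5,
) -> Optional[str]:
    """Iterate propose -> test until the tests pass or the budget runs out.

    Returns the passing patch, or None if no attempt passed.
    """
    feedback: Optional[str] = None
    for _ in range(max_iterations):
        patch = propose_patch(spec, feedback)
        result = run_tests(patch)
        if result.passed:
            return patch  # the human reviews this output, not the steps
        feedback = result.feedback  # the next attempt sees what failed
    return None


# Stand-in agent and test runner (hypothetical) so the sketch runs on its own.
def fake_agent(spec: str, feedback: Optional[str]) -> str:
    return "return a + b" if feedback else "return a - b"


def fake_tests(patch: str) -> CheckResult:
    ok = patch == "return a + b"
    return CheckResult(ok, "" if ok else "expected add(2, 3) == 5")


final_patch = run_agent_loop("add(a, b) returns the sum", fake_agent, fake_tests)
```

The iteration cap matters: without it, an agent that keeps failing the same test will spin forever.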

Agentic coding does not reduce the need for good plans, complete requirements, sound architecture, or rigorous testing. It actually increases their value and moves human attention from ‘coding’ to planning. Consequently, the developer’s focus shifts from functions to specifications, prompts, and orchestration logic. Code still exists and still matters, but it will be written by LLMs fed carefully engineered context.

From Code Author to System Orchestrator

As developers we need to start getting comfortable with the idea that our jobs will consist more of:

- Designing executable intent

- Defining the scope and success criteria of a task

- Encoding constraints and invariants

- Supplying architectural guides and domain knowledge

- Orchestrating parallel agents to explore, implement, and verify solutions

- Having other agents explore and improve the code and tests, and ensure everything meets requirements

If it’s not in the spec (development documents), it doesn’t get into the tests, and it doesn’t exist.

The core skill is no longer syntax mastery, but systems thinking. Developers who adapt to this shift move faster, scale further, and exert greater leverage than those who continue to focus solely on manual implementation.

Autonomous CLI Harnesses as the New Interface

This means IDEs are no longer the main tool for developers. Sure, we’ll still have one for occasionally inspecting something or reviewing a configuration setting, but most of our work will be in text editors and command-line agent harnesses, specifying the project and directing traffic.

These harnesses will help us:

- Inject relevant code, documentation, and history into model context

- Spawn sub-agents to explore specific domains or edge cases

- Track long-running tasks and partial results

- Apply guardrails such as linting, formatting, and policy checks

We won’t use them to craft and issue prompts by hand but rather to manage and monitor progress.

Core Concepts

Heads up: this landscape shifts daily. There are no settled best practices yet, and the “right” choice this month (week) might be obsolete by next quarter (tomorrow). That said, here’s what you’ll need to wrap your head around:

Specs as Source of Truth - PRDs, specs, tasks, epics—these aren’t just planning artifacts anymore. They’re the primary input your agents consume. A vague requirement produces vague code. A precise spec with clear acceptance criteria produces something you can actually ship. The discipline you put into documentation directly determines the quality of what comes out the other side.
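One way to make "clear acceptance criteria" concrete is to treat the criteria as data and check that each one is covered by a test. The sketch below assumes a naming convention I invented for illustration (test names contain the criterion id, like `test_ac1_...`); the `Spec` class and `uncovered_criteria` function are hypothetical, and the substring match is deliberately crude.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Spec:
    title: str
    acceptance_criteria: Dict[str, str]  # criterion id -> description


def uncovered_criteria(spec: Spec, test_names: List[str]) -> List[str]:
    """Return the criterion ids that no test name mentions.

    Assumes test names embed the criterion id (a made-up convention).
    """
    return [
        cid
        for cid in spec.acceptance_criteria
        if not any(cid.lower() in name.lower() for name in test_names)
    ]


spec = Spec(
    title="Export survey results",
    acceptance_criteria={
        "AC1": "CSV export includes a header row",
        "AC2": "Export fails with a clear error when the survey is empty",
    },
)
tests = ["test_ac1_csv_has_header_row"]
missing = uncovered_criteria(spec, tests)  # ["AC2"]
```

A check like this can run as one more guardrail: if a criterion has no test, the agent has something specific to go fix.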

Context Engineering - This is the art of feeding your agents the right information at the right time. Too little context and they hallucinate. Too much and they get confused or hit token limits. You’ll spend real time thinking about which files, docs, and history snippets belong in each prompt window.
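To give a feel for the trade-off, here is a rough sketch of packing context under a token budget. Everything in it is an assumption for illustration: the `Snippet` type, the relevance scores (which would come from search or embeddings in practice), and the four-characters-per-token estimate, which real tokenizers will not match exactly.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Snippet:
    path: str
    text: str
    relevance: float  # assumed to come from search or embeddings


def estimate_tokens(text: str) -> int:
    # Rough heuristic (about 4 characters per token); real tokenizers differ.
    return max(1, len(text) // 4)


def pack_context(snippets: List[Snippet], budget: int) -> List[Snippet]:
    """Greedily keep the most relevant snippets that still fit the budget."""
    chosen: List[Snippet] = []
    used = 0
    for snippet in sorted(snippets, key=lambda s: s.relevance, reverse=True):
        cost = estimate_tokens(snippet.text)
        if used + cost <= budget:
            chosen.append(snippet)
            used += cost
    return chosen


candidates = [
    Snippet("CHANGELOG.md", "z" * 2000, relevance=0.2),    # ~500 tokens
    Snippet("docs/adr-001.md", "x" * 400, relevance=0.9),  # ~100 tokens
    Snippet("src/export.py", "y" * 800, relevance=0.8),    # ~200 tokens
]
selected = [s.path for s in pack_context(candidates, budget=350)]
```

With a budget of 350, the two most relevant files fit and the changelog is left out. Greedy selection is only one possible policy; harnesses may do something smarter.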

Agent Harnesses - Claude Code, Cursor, Codex, and others—these are the command-line and IDE tools that wrap LLMs and give them agency. They manage context injection, spawn sub-tasks, and track progress. Picking one matters less than learning the patterns they share.

Skills, Custom Commands, Hooks, and MCPs - Most harnesses let you extend their behavior. Custom commands encode repeatable workflows. Hooks trigger actions at specific points (pre-commit checks, post-generation linting). MCP (Model Context Protocol) servers connect agents to external tools and data sources. This is where you encode your team’s standards.

Agent Coordination - When you spawn multiple agents—one exploring an edge case, another refactoring a module, a third writing tests—you need a way to track and merge their work. Some teams use markdown task lists. Others use more sophisticated systems like Beads. The tooling is immature but the problem is real.
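The markdown task list approach is simple enough to sketch. This assumes the common `- [ ]` / `- [x]` checkbox syntax; `parse_tasks` and `next_open_task` are hypothetical helpers, not part of any tool mentioned here.

```python
import re
from typing import List, Optional, Tuple

# Matches "- [ ] title" and "- [x] title" (also "*" bullets).
TASK_RE = re.compile(r"^\s*[-*]\s+\[( |x|X)\]\s+(.*)$")


def parse_tasks(markdown: str) -> List[Tuple[bool, str]]:
    """Return (done, title) pairs for every checkbox item in the text."""
    tasks = []
    for line in markdown.splitlines():
        match = TASK_RE.match(line)
        if match:
            tasks.append((match.group(1).lower() == "x", match.group(2).strip()))
    return tasks


def next_open_task(markdown: str) -> Optional[str]:
    """Return the first unchecked task, or None when everything is done."""
    for done, title in parse_tasks(markdown):
        if not done:
            return title
    return None


board = """
- [x] Explore empty-survey edge case
- [ ] Refactor export module
- [ ] Write export tests
"""
upcoming = next_open_task(board)
```

A plain file like this is easy for both humans and agents to read and update, which is most of its appeal. It does nothing about two agents editing the same file at once, which is where heavier tooling comes in.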

Guardrails and Policy Enforcement - Agents can confidently produce garbage if you let them. Linting, type checking, test suites, and security scans aren’t optional—they’re your feedback loop. Run them automatically. Fail fast. Make the agent fix its own mistakes before you ever see them.
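A fail-fast guardrail runner can be very small. In this sketch `run_guardrails` is a hypothetical name, and the commands are placeholders that just invoke the Python interpreter so the example runs anywhere; in a real project they would be your linter, type checker, and test runner.

```python
import subprocess
import sys
from typing import List, Optional, Sequence, Tuple


def run_guardrails(checks: Sequence[Tuple[str, List[str]]]) -> Optional[str]:
    """Run checks in order; return feedback for the first failure, else None."""
    for name, command in checks:
        proc = subprocess.run(command, capture_output=True, text=True)
        if proc.returncode != 0:
            # Fail fast: later checks are skipped until this one is fixed.
            return f"{name} failed:\n{proc.stdout}{proc.stderr}"
    return None


# Placeholder commands (assumptions) standing in for real tools.
checks = [
    ("lint", [sys.executable, "-c", "pass"]),
    ("tests", [sys.executable, "-c",
               "import sys; print('1 test failed'); sys.exit(1)"]),
    ("security", [sys.executable, "-c", "pass"]),
]
feedback = run_guardrails(checks)
```

The returned text is what gets handed back to the agent as its next instruction, so the agent fixes the failure before a human ever looks at the change.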

Long-Running Execution - Some tasks take hours. Your harness needs to handle interruptions, checkpoint progress, and resume gracefully. This is operational work that feels unfamiliar if you’re used to quick feedback loops.
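Checkpoint-and-resume does not need to be fancy. The sketch below is one possible approach under my own assumptions: completed step names are stored in a JSON file, and `run_with_checkpoints` is a hypothetical helper, not something a specific harness provides.

```python
import json
import os
import tempfile
from typing import Callable, List


def run_with_checkpoints(
    steps: List[str], do_step: Callable[[str], None], path: str
) -> List[str]:
    """Run steps in order, recording each completed one so a rerun skips it."""
    done: List[str] = []
    if os.path.exists(path):
        with open(path) as f:
            done = json.load(f)
    for step in steps:
        if step in done:
            continue
        do_step(step)
        done.append(step)
        # Write to a temp file, then rename, so an interruption cannot
        # leave a half-written checkpoint behind.
        tmp_path = path + ".tmp"
        with open(tmp_path, "w") as f:
            json.dump(done, f)
        os.replace(tmp_path, path)
    return done


# Simulate one interruption during the "implement" step.
executed: List[str] = []
crash_once = {"implement"}


def do_step(step: str) -> None:
    if step in crash_once:
        crash_once.discard(step)
        raise RuntimeError("simulated interruption")
    executed.append(step)


checkpoint = os.path.join(tempfile.mkdtemp(), "progress.json")
steps = ["plan", "implement", "test"]
try:
    run_with_checkpoints(steps, do_step, checkpoint)
except RuntimeError:
    pass  # the first run dies partway through
completed = run_with_checkpoints(steps, do_step, checkpoint)
```

On the second run the "plan" step is skipped because it is already in the checkpoint, so nothing is redone.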

The benefits here go beyond speed. You get consistency across a growing codebase, coherence in architectural decisions, and—almost as a side effect—a written record of why things were built the way they were. Organizations that master this gain leverage that compounds over time.

Where to Start

If you’re curious but overwhelmed, here’s what I’d do: pick up Claude Code. There are other harnesses (OpenCode is my second choice), but Claude Code is polished enough that you won’t fight the tooling while you’re learning the concepts. That also means using Opus 4.5 or whatever the current flagship is when you read this. Don’t overthink model selection yet.

For spec-driven workflows, look at OpenSpec, SpecKit, or BMAD-Method depending on how formal you want to get. BMAD is heavier but more structured. SpecKit is lighter. Try one and see if it clicks. If it does, you may end up writing your own, tailored to your preferences, projects, and workflow.

Don’t sleep on custom commands and skills—they’re easier to set up than you’d think, and they’re where you encode your own patterns. Find the MCPs that fit your stack (Playwright for testing, Context7 for documentation, database connectors, whatever you need). Set up guardrails early: hooks to disallow commands, linting, type checking, and a test runner that agents can invoke.
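To show what a "disallow commands" guardrail boils down to, here is a small sketch of the check itself. It is not the hook interface of Claude Code or any other harness (each has its own way of wiring this in); `check_command` and the deny lists are hypothetical, and the policy is an example rather than a recommendation.

```python
import shlex
from typing import Optional

# Example policy only (an assumption); tune this to your own project.
DENIED_PROGRAMS = {"rm", "sudo"}
DENIED_PHRASES = ("git push --force", "git reset --hard")


def check_command(command: str) -> Optional[str]:
    """Return a reason to block the command, or None to allow it."""
    try:
        tokens = shlex.split(command)
    except ValueError:
        return "blocked: could not parse command"
    if not tokens:
        return None
    if tokens[0] in DENIED_PROGRAMS:
        return f"blocked program: {tokens[0]}"
    normalized = " ".join(tokens)
    for phrase in DENIED_PHRASES:
        if phrase in normalized:
            return f"blocked phrase: {phrase}"
    return None


allowed = check_command("pytest -q")
blocked_rm = check_command("rm -rf build")
blocked_push = check_command("git push --force origin main")
```

A check this simple is easy to get around (for example by wrapping a command in `sh -c`), so treat it as a first line of defense alongside sandboxing and permissions, not a replacement for them.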

Then try to build something small. Not so small that an LLM can one-shot it, but scoped enough that you can finish it in a few sessions. A CLI tool, a simple API, a dashboard. The constraint: don’t write a single line of code yourself.

You’ll hit frustrating moments. The agent will misunderstand you, or produce something bizarrely wrong, or spin in circles. When that happens, resist the urge to just fix the code manually. Step back. Reframe the problem. Write a better spec. That’s the skill you’re building.

Conclusion

Software development isn’t dying. It’s moving up the stack.

The developers who thrive in this shift will be the ones who can extract and understand requirements, frame problems clearly, write specs that leave no room for ambiguity, and evaluate whether what came out actually solves the problem. That means product thinking, systems thinking, and communication skills matter more than they used to. The best “coders” of the next few years might spend most of their time writing prose.

This isn’t a loss. It’s change. And we can change with it.

References & Resources

- Claude Code - https://code.claude.com/docs/en/overview

- Codex product page - https://openai.com/codex

- OpenSpec - https://openspec.dev

- SpecKit - https://speckit.org

- BMAD-Method - https://github.com/bmad-code-org/BMAD-METHOD

- Playwright - https://playwright.dev/

- Context7 - https://github.com/upstash/context7

- Claude Opus 4.5 - https://www.anthropic.com/news/claude-opus-4-5

- GPT 5.2 - https://openai.com/index/introducing-gpt-5-2/

- Gemini 3 Pro - https://deepmind.google/models/gemini/pro/

- Ralph (Wiggum) Loop - https://github.com/anthropics/claude-plugins-official/tree/main/plugins/ralph-loop

- Gastown / Gas Town - https://github.com/steveyegge/gastown

- Automaker - https://automaker.app/

- Beads - https://github.com/steveyegge/beads