Agentic Multi-Stakeholder Analysis

A system of multiple LLM agents, each representing a different stakeholder perspective, that analyzes articles, debates competing positions, and produces structured memos that stress-test arguments from every angle that matters. It is for anyone who cares about decision quality and wants to see what a convincing argument looks like after it survives real scrutiny.

Most arguments sound convincing in isolation. The issue isn’t that they’re wrong — it’s that they’re incomplete. They optimize for one stakeholder’s perspective and treat everyone else’s concerns as externalities. A well-argued case for AI optimism doesn’t mention the displaced copywriter who can’t make rent. A well-argued case for regulation doesn’t mention the startup founder who can’t ship because compliance costs more than their product.

I wanted a tool that forces arguments through the gauntlet of competing perspectives.

So I built one. A system that uses a group of LLM agents — each primed with a different stakeholder viewpoint and equipped with research tools — to analyze a source article, express their reactions, and then debate each other’s positions.

Feed it a thesis, a proposal, a strategy memo, or an opinion piece, and watch it get picked apart from every angle that matters. The output isn’t a summary. It’s a structured memo with a thesis teardown, conflict mapping, system stability analysis, falsifiers, conditional theses, and unresolvable tradeoffs: the kind of analysis that goes well beyond what the original piece covers.

How It Works

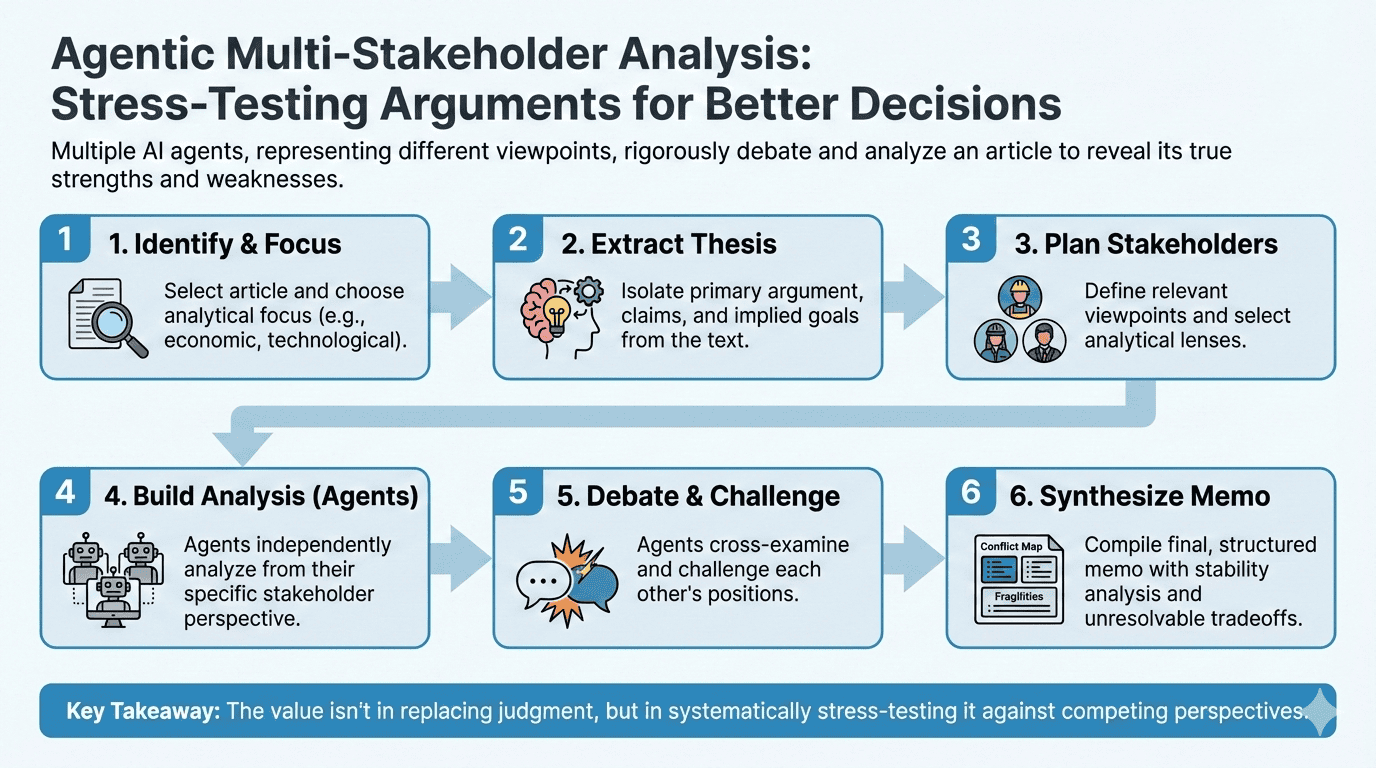

1. Article and Focus Identification. I find an article worth analyzing, choose a focus (technological feasibility, economic impact, geopolitical implications, etc.), and hand both to the system.

2. Thesis Extraction. The system reads the document and extracts a structured thesis: primary argument, secondary claims, implied goal if adopted, and interpretation notes where the argument is ambiguous. This grounds the entire analysis — without an explicit thesis, agents would each interpret the document differently and talk past each other.
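As a rough illustration (not the system's actual code), the extracted thesis could be held in a small immutable record that every agent receives verbatim. The class name, field names, and the `as_prompt_block` helper are assumptions made for this sketch, and the sample text is placeholder content.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thesis:
    """Structured thesis shared by every agent (field names are illustrative)."""
    primary_argument: str
    secondary_claims: tuple[str, ...] = ()
    implied_goal: str = ""
    interpretation_notes: tuple[str, ...] = ()

    def as_prompt_block(self) -> str:
        """Render the thesis as plain text that can be prepended to a prompt."""
        lines = [f"PRIMARY ARGUMENT: {self.primary_argument}"]
        lines += [f"SECONDARY CLAIM: {c}" for c in self.secondary_claims]
        if self.implied_goal:
            lines.append(f"IMPLIED GOAL IF ADOPTED: {self.implied_goal}")
        lines += [f"INTERPRETATION NOTE: {n}" for n in self.interpretation_notes]
        return "\n".join(lines)


# Placeholder content, purely for demonstration.
thesis = Thesis(
    primary_argument="(placeholder) The article's main claim goes here.",
    secondary_claims=("(placeholder) A supporting claim.",),
    implied_goal="(placeholder) What changes if readers accept the claim.",
)
print(thesis.as_prompt_block())
```

Freezing the record is one way to make sure all agents argue about the same thesis rather than a copy that drifted during the run.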

3. Stakeholder Planning. The system considers ten stakeholder categories as a checklist:

- Direct beneficiaries and directly harmed parties

- Implementers and regulators

- Competitors and capital providers

- Long-term and broader system stakeholders

- Marginalized voices and meta-level observers

Not all ten apply to every thesis, so the system selects five to seven that are genuinely relevant and ranks them by impact. It also selects analytical lenses — argument logic, incentives and power dynamics, constraints, systems dynamics, time horizon, cognitive biases, risk under stress, and normative tensions.
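The selection step can be sketched as a filter followed by a ranking. This is a minimal sketch under stated assumptions: the `relevant` flag and numeric `impact` score are hypothetical stand-ins for whatever judgment the planner actually makes, and the scores below are made-up demonstration values.

```python
from dataclasses import dataclass

# The ten checklist categories listed above.
CATEGORIES = (
    "direct beneficiaries", "directly harmed parties",
    "implementers", "regulators",
    "competitors", "capital providers",
    "long-term stakeholders", "broader system stakeholders",
    "marginalized voices", "meta-level observers",
)


@dataclass(frozen=True)
class Candidate:
    category: str
    relevant: bool  # hypothetical: the planner's relevance call for this thesis
    impact: float   # hypothetical: 0.0 (negligible) to 1.0 (severe)


def select_stakeholders(candidates, min_n=5, max_n=7):
    """Keep relevant candidates, rank by impact (highest first), cap at max_n."""
    ranked = sorted(
        (c for c in candidates if c.relevant),
        key=lambda c: c.impact,
        reverse=True,
    )
    if len(ranked) < min_n:
        raise ValueError(f"only {len(ranked)} relevant stakeholders; need {min_n}")
    return ranked[:max_n]


# Made-up scores, one per category, only to exercise the function.
scores = [0.9, 0.8, 0.7, 0.2, 0.6, 0.5, 0.4, 0.3, 0.65, 0.1]
candidates = [
    Candidate(cat, relevant=score >= 0.3, impact=score)
    for cat, score in zip(CATEGORIES, scores)
]
team = select_stakeholders(candidates)
print([c.category for c in team])
```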

4. Team Assembly. The system spawns one agent per stakeholder. Each agent receives the stakeholder-analyst definition (a set of behavioral rules for producing rigorous analysis), the thesis, the full source document, the team roster, and their Round 1 objective.
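One way to picture team assembly is as building an identical briefing packet for each agent, differing only in which stakeholder it speaks for. The `AgentBrief` type and `assemble_team` function are hypothetical names for this sketch; how the real system spawns agents is not shown in this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentBrief:
    """Everything one stakeholder agent is handed at spawn time."""
    stakeholder: str
    analyst_definition: str   # the shared behavioral rules
    thesis: str
    source_document: str
    roster: tuple[str, ...]   # every stakeholder on the team
    round_objective: str


def assemble_team(stakeholders, analyst_definition, thesis,
                  source_document, round_objective):
    """Create one brief per stakeholder; all briefs share the same context."""
    roster = tuple(stakeholders)
    return [
        AgentBrief(name, analyst_definition, thesis,
                   source_document, roster, round_objective)
        for name in roster
    ]


briefs = assemble_team(
    stakeholders=["regulators", "implementers", "capital providers"],
    analyst_definition="(placeholder) rules for rigorous analysis",
    thesis="(placeholder) extracted thesis",
    source_document="(placeholder) full article text",
    round_objective="(placeholder) Round 1 objective",
)
print([b.stakeholder for b in briefs])
```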

5. Analysis & Debate (Rounds 1–3). Each stakeholder agent builds its analysis over three rounds, covering:

- Identity, thesis interpretation, steelman argument, and a full Toulmin breakdown

- Gains and losses, power shifts, constraints (legal, resource, organizational, psychological, technical), and cognitive biases

- Feedback loops, externalities, time horizons, likely actions, and falsifiers
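The Toulmin breakdown mentioned in the first bullet has six standard parts: claim, grounds, warrant, backing, qualifier, and rebuttal. A small record like the one below (a sketch, with a hypothetical `missing_parts` helper) makes it easy to spot which parts an agent has left blank.

```python
from dataclasses import dataclass, fields


@dataclass
class ToulminBreakdown:
    """The six parts of a Toulmin argument, each as free text."""
    claim: str = ""      # what is being asserted
    grounds: str = ""    # evidence offered for the claim
    warrant: str = ""    # why the grounds support the claim
    backing: str = ""    # support for the warrant itself
    qualifier: str = ""  # how strongly the claim is meant to hold
    rebuttal: str = ""   # conditions under which the claim fails

    def missing_parts(self):
        """Return the names of parts that are empty, in definition order."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


draft = ToulminBreakdown(claim="(placeholder claim)", grounds="(placeholder evidence)")
print(draft.missing_parts())
```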

Cross-Stakeholder Debate (2–4 rounds). Once the individual analyses are written, agents read each other’s work and debate. They challenge specific claims, question evidence, and identify where their interests genuinely conflict versus where they align. The debate runs for at least two rounds and ends after at most four, or earlier once the coordinator judges that agents are repeating themselves and the discussion has stalled.
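The stopping rule can be sketched as a bounded loop with a stall check. In the system described here the coordinator makes that judgment; the word-overlap (Jaccard) heuristic below is a deliberately crude stand-in chosen so the example runs without a model, and the 0.8 threshold, function names, and stub agents are all assumptions.

```python
from typing import Callable

Messages = dict[str, str]  # agent name -> that agent's latest message


def jaccard(a: str, b: str) -> float:
    """Word-set overlap between two messages, from 0.0 to 1.0."""
    wa, wb = set(a.lower().split()), set(b.lower().split())
    if not wa and not wb:
        return 1.0
    return len(wa & wb) / len(wa | wb)


def has_stalled(previous: Messages, current: Messages, threshold: float = 0.8) -> bool:
    """True when every agent's new message largely repeats its previous one."""
    return all(
        jaccard(previous.get(name, ""), text) >= threshold
        for name, text in current.items()
    )


def run_debate(agents: dict[str, Callable[[Messages], str]], opening: Messages,
               min_rounds: int = 2, max_rounds: int = 4) -> list[Messages]:
    """Run between min_rounds and max_rounds, stopping early on a stall."""
    transcript = [opening]
    for round_no in range(1, max_rounds + 1):
        latest = transcript[-1]
        replies = {name: respond(latest) for name, respond in agents.items()}
        transcript.append(replies)
        if round_no >= min_rounds and has_stalled(latest, replies):
            break
    return transcript


# Stub agents that repeat themselves, so the debate stops at the minimum.
stubs = {
    "regulators": lambda msgs: "compliance costs are justified by the risk",
    "implementers": lambda msgs: "compliance costs block shipping entirely",
}
opening = {"regulators": "initial analysis", "implementers": "initial analysis"}
transcript = run_debate(stubs, opening)
print(len(transcript) - 1, "debate rounds")
```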

6. Synthesis. The coordinator produces the final memo: stakeholder analyses assembled, conflicts mapped, system stability assessed, fragilities ranked by severity, the thesis reconstructed based on what survived scrutiny, unresolvable tradeoffs documented, and decision guidance provided.
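The memo's sections map naturally onto a single record, with fragilities sorted by severity before rendering. This is only a sketch: the four-level `Severity` scale and all field names are assumptions, not the memo's actual schema.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Severity(IntEnum):
    """Hypothetical four-level scale; higher means more severe."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


@dataclass(frozen=True)
class Fragility:
    description: str
    severity: Severity


@dataclass
class Memo:
    """One field per section of the final memo (names are illustrative)."""
    stakeholder_analyses: dict[str, str] = field(default_factory=dict)
    conflicts: list[str] = field(default_factory=list)
    stability_assessment: str = ""
    fragilities: list[Fragility] = field(default_factory=list)
    reconstructed_thesis: str = ""
    unresolvable_tradeoffs: list[str] = field(default_factory=list)
    decision_guidance: str = ""

    def ranked_fragilities(self) -> list[Fragility]:
        """Most severe first; ties keep their original order."""
        return sorted(self.fragilities, key=lambda f: f.severity, reverse=True)


memo = Memo(fragilities=[
    Fragility("(placeholder) minor assumption", Severity.LOW),
    Fragility("(placeholder) load-bearing assumption", Severity.CRITICAL),
    Fragility("(placeholder) moderate dependency", Severity.MEDIUM),
])
for item in memo.ranked_fragilities():
    print(item.severity.name, "-", item.description)
```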

Examples

The Consiglieri page has examples, and I’ll add more over time as I refine the process. The first three analyze “Something Big is Happening” by Matt Shumer and two rebuttal pieces: “Tool Shaped Objects” by Will Mandis and “AI isn’t coming for your future. Fear is.” by Connor Boyack.

Why Bother?

I built this because I care about decision quality. I read constantly about technology, policy, strategy, and incentives. Some people think AI-generated analysis is just slop — that models can’t do real critical thinking. I disagree. These agents aren’t producing original insight from nothing; they’re systematically applying analytical frameworks across perspectives in a way that’s genuinely hard for a single reader to do alone. The value isn’t in replacing your judgment. It’s in stress-testing it.

The outputs are long — often much longer than the original article. I’m working on tightening them. But I’d rather have a thorough analysis I can skim than a polished summary that missed something important.

I hope you’ll consider building something similar to strengthen your own research and decision-making.